Video systems

Outside broadcasts

Nick Flaherty examines the developments driving the emergence of more powerful and better quality video systems for uncrewed vehicles

(Courtesy of Skypersonic)

The technology of vision systems in the uncrewed sector is facing several competing demands. In some cases, the need is for low-latency, responsive video streams from multiple cameras to allow a remote operator to drive the platform safely, whether in the middle of the ocean or on the surface of Mars.

In others, machine learning (ML) inference has to identify elements within a video frame, either to send to an operator or for the decision-making algorithms on the platform. Yet other applications require ultra-high resolution video feeds from ever-smaller gimbals, demanding the latest video encoding technologies.

Maturing silicon technology is enabling more compact video processing systems, with the latest encoders integrated into hardware to reduce the size, weight and power. Also, a new generation of graphics processing unit (GPU) is enabling more AI processing with existing ML frameworks for processing the video. New architectures for ML, both digital and analogue, are also reducing the power consumption of chips to the point where it is practical to run large frameworks in the air.

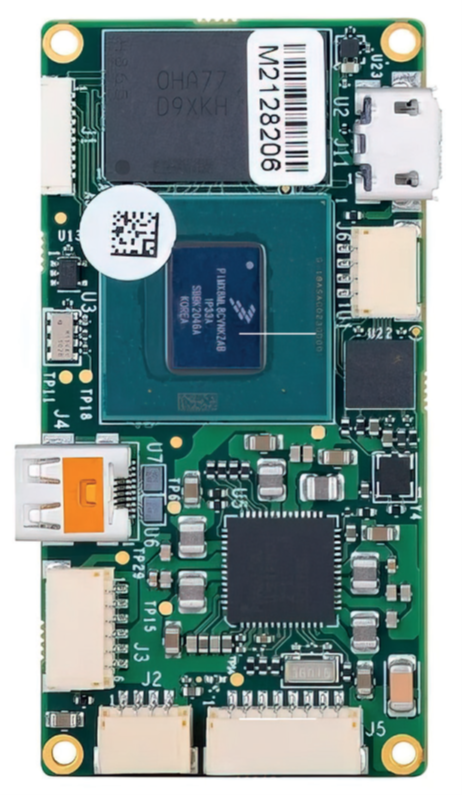

UAV video systems are seeing demand for up to eight standard definition (SD) image sensors, or five if there is a mix of high definition (HD) and SD. For example, this could be using one HD sensor to zoom into images, with multiple night vision feeds.

These can be implemented for small gimbals on a board measuring 50 x 25 mm, made up of a motherboard with two daughter boards that can be swapped in and out for different types of camera. This provides either two encoding streams of 1080p60 HD video or one HD encoding channel with four SD channels for outputs using composite video, HDMI and low-voltage differential signalling (LVDS).

The processor uses four Cortex-A53 microprocessor cores running at 1.5 GHz with 1 Mbyte of L2 cache. That allows the chip to run operating systems such as Linux with the video codecs needed to decode 4Kp60 or 1080p60 H.265 video, as well as older codecs such as VP9, VP8 and H.264. There is also a smaller 266 MHz Cortex-M4 core for more deterministic real-time operation.

The CSI-2 format from the MIPI Alliance is becoming more popular as the interface (see sidebar: Interface standards). This is a variant of a serialiser/deserialiser (SerDes) interface that takes a parallel interface and combines the signals onto up to four high-speed serial lines.

That makes it simpler to route the serial channels, for example over a gimbal interface. Cameras with MIPI CSI-2 are able to remove the other interfaces, reducing the size of the video board, particularly in UAVs, allowing smaller gimbal designs.

This also avoids issues with the LVDS interface, which has multiple variants. That means boards need to be tweaked for individual cameras, which is not an issue when using CSI-2.

Remote operation

For remote control of an uncrewed system, low latency is vital. The operator needs to see the video with as small a delay as possible to ensure that the control is accurate.

The latency in the video system consists of several elements. There is the time taken to encode the video, the time taken to send it over the network – which could be highly variable if the internet is used – and the decoding time in the video player the operator uses.

These are often tackled separately, although system developers also look at the entire signal chain to minimise the latency, using forward error correction to help maintain a steady flow of data. This consumes only a little bandwidth, adding information for repairing problems when streaming.
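The repair principle behind forward error correction can be illustrated with the simplest possible scheme: one XOR parity packet per group of equal-length packets. This is only a sketch under that assumption, not the scheme used by any system described here; production links typically use stronger codes such as Reed-Solomon, and all function names below are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Build one parity packet for a group of equal-length packets."""
    return reduce(xor_bytes, packets)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild a group in which at most one packet (marked None) was lost."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("one XOR parity packet can repair only one loss")
    repaired = list(received)
    if missing:
        present = [p for p in received if p is not None]
        # XOR of the parity with every surviving packet leaves the lost one.
        repaired[missing[0]] = reduce(xor_bytes, present, parity)
    return repaired


group = [b"aaaa", b"bbbb", b"cccc", b"dddd"]
parity = make_parity(group)
# Packet 1 is lost in transit; the receiver rebuilds it without a resend.
restored = recover([group[0], None, group[2], group[3]], parity)
```

With four data packets per parity packet the overhead is 25%; larger groups reduce the overhead but can still repair only one loss per group.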

The capture typically takes 15 ms for a 60 Hz signal from the image processor, with encoding taking 5-10 ms. That can vary depending on the complexity of the images, which does not help with providing a deterministic data flow.
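A latency budget makes the contribution of each stage explicit. The sketch below uses the capture and encode figures quoted above; the network and decode figures are placeholder assumptions chosen purely for illustration.

```python
def frame_period_ms(frame_rate_hz: float) -> float:
    """Time between successive frames at a given frame rate."""
    return 1000.0 / frame_rate_hz


def end_to_end_ms(capture_ms: float, encode_ms: float,
                  network_ms: float, decode_ms: float) -> float:
    """Sum the stages of the video chain from sensor to screen."""
    return capture_ms + encode_ms + network_ms + decode_ms


CAPTURE_MS = 15.0               # from the text
ENCODE_RANGE_MS = (5.0, 10.0)   # from the text, varies with image complexity
NETWORK_MS = 30.0               # assumption for illustration only
DECODE_MS = 10.0                # assumption for illustration only

best = end_to_end_ms(CAPTURE_MS, ENCODE_RANGE_MS[0], NETWORK_MS, DECODE_MS)
worst = end_to_end_ms(CAPTURE_MS, ENCODE_RANGE_MS[1], NETWORK_MS, DECODE_MS)
print(f"frame period at 60 Hz: {frame_period_ms(60):.1f} ms")
print(f"end-to-end latency: {best:.0f}-{worst:.0f} ms")
```

The spread between the best and worst cases is the encoder's contribution to jitter, before any variation in the network is taken into account.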

The Hantro codec, which is present on a number of popular chips, is a key piece of the signal chain. It is a stateless accelerator that does not need firmware to operate, making it more robust and better suited for open source platforms, where having full control over the system is desirable to achieve the desired latency.

In this case, the support is split in two: a kernel driver, which is provided by a Linux Hantro driver, and a user component that can be provided by frameworks such as GStreamer and FFmpeg.

This has been used for H.265. Unlike the currently supported codecs (JPEG, MPEG-2, VP8 and H.264), it doesn’t rely on the G1 hardware block but on the second video processor unit, the G2. For this first step, the driver supports the basic H.265 features up to level 5.1.

Enhanced features such as 10-bit depth per sample with 4:2:0 chroma sampling, scaling or tile decoding could be added later. Another possible development is to use compressed buffers to limit the memory bandwidth consumption.

Then there is the transmission. Dynamic encoding can adapt to the signal level of the RF link by using tables of reference data to adjust the frame rate, bit rate and resolution dynamically. This ensures some video arrives at the right time regardless of the flying conditions and the radio link.
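A table-driven approach of this kind might look like the following sketch. The signal thresholds and encoder settings are hypothetical values chosen for illustration, not figures from any real product.

```python
from typing import List, NamedTuple, Tuple


class EncoderProfile(NamedTuple):
    width: int
    height: int
    fps: int
    bitrate_kbps: int


# Hypothetical reference table: (minimum signal level in dBm, profile),
# ordered from the strongest link to the weakest.
PROFILE_TABLE: List[Tuple[float, EncoderProfile]] = [
    (-60.0, EncoderProfile(1920, 1080, 60, 8000)),
    (-75.0, EncoderProfile(1280, 720, 30, 3000)),
    (-85.0, EncoderProfile(720, 576, 25, 1000)),
]
# Used when the link is weaker than every threshold in the table.
FALLBACK = EncoderProfile(352, 288, 15, 300)


def select_profile(signal_dbm: float) -> EncoderProfile:
    """Return the richest profile whose threshold the link still meets."""
    for threshold_dbm, profile in PROFILE_TABLE:
        if signal_dbm >= threshold_dbm:
            return profile
    return FALLBACK


print(select_profile(-80.0))
```

Keeping a low-rate fallback profile is what allows some video to keep arriving when the link degrades, rather than the stream stalling.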

(Courtesy of Nvidia)

Another technology for low-latency operation is adaptive encoding, which is optimised across the available network bandwidth to allow a UGV or UAV to be controlled from a remote location in almost real time (a latency of 400 ms).

When using the internet there are other considerations. With standard routing, the IP packets can be sent via many different routes, waiting at the destination for all the packets to arrive and then making sure they are in the right order. That can create a highly variable latency.
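The effect of out-of-order arrival can be seen in a minimal reorder buffer, sketched below with hypothetical names. A packet that arrives early cannot be released until every packet before it has arrived, so one slow route delays everything queued behind it.

```python
import heapq
from typing import List, Tuple


class ReorderBuffer:
    """Release packets strictly in sequence order."""

    def __init__(self, first_seq: int = 0) -> None:
        self._next = first_seq
        self._heap: List[Tuple[int, bytes]] = []

    def push(self, seq: int, payload: bytes) -> List[bytes]:
        """Accept one packet; return the payloads that are now deliverable."""
        if seq < self._next:
            return []  # late duplicate of a packet already delivered
        heapq.heappush(self._heap, (seq, payload))
        ready: List[bytes] = []
        while self._heap and self._heap[0][0] <= self._next:
            head_seq, head_payload = heapq.heappop(self._heap)
            if head_seq == self._next:
                ready.append(head_payload)
                self._next += 1
            # otherwise it is a duplicate of a delivered packet: drop it
        return ready


buf = ReorderBuffer()
print(buf.push(1, b"frame-1"))  # held back, packet 0 is still missing
print(buf.push(2, b"frame-2"))  # held back as well
print(buf.push(0, b"frame-0"))  # releases all three in order
```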

However, virtual private networks (VPNs) can be set up with dedicated servers at each end of the link that can send the packets over pre-established routes. That provides much greater reliability for sending packets and much lower latency.

The final element for low latency comes from the efficiency of encoding and decoding the stream, then transferring the video to the screen. This is down to the design of the software running on the client, usually a tablet computer, and how the data is fed from the memory through the processor to the display.

All of this has been combined to demonstrate a UAV being remotely piloted using a standard mobile phone. An operator in Orlando, Florida, controlled a UAV inspecting a factory in Turin, Italy, over a distance of 4800 miles (UST 45, August/September 2022). The end-to-end signal latency was 68 ms, allowing safe piloting via the video feed alone. The radio controller of the UAV was connected to a PC with software that optimised the packets over a dedicated VPN link via the mobile phone.

Previous flights had relied on a more sophisticated, non-mobile internet connection, but the demonstration showed that an internet link via a mobile phone in the vicinity of the UAV is all that is needed to pilot the vehicle remotely from virtually anywhere.

The technology is also being used for the next generation of missions to Mars. A 15-day test on Mount Etna, an active volcano in Italy where the landscape is similar to Martian geology, allowed both a UAV and a UGV rover to be controlled by personnel in Houston, Texas, in real time without the need for data from a GNSS satellite network, which of course is not available on Mars.

AI accelerators

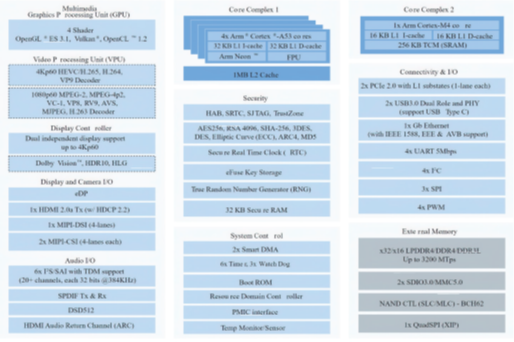

There are several options for AI processing of video content for uncrewed systems, whether in the air or on the ground. The latest GPU chip runs popular image processing AI frameworks as well as the ROS2 operating system and Yocto Linux. The chip provides up to 275 TOPS – eight times the processing power of the previous GPU chip – in a module with the same pinout.

(Courtesy of SpaceIL/Maris-Tech)

The production systems are supported by a software stack with development tools and libraries for computer vision, and tools to accelerate model development with pre-trained models.

More than 350 camera and sensor options are available for the module to support challenging indoor/outdoor lighting conditions, as well as capabilities such as Lidar for mapping, localisation and navigation in robotics and autonomous machines.

On-chip AI

The AI boards are popular for UGV designs where power consumption is less of an issue, but developers also want to use AI on UAVs. That is driving more interest in low-power AI chips.

The leading contender for this is an AI chip with a proprietary architecture built from three types of blocks: control, memory and computing. These are assigned to various layers of the neural network framework and are designed to keep the data on chip. The chip has a performance of up to 26 TOPS with a power consumption of 6-7 W, which makes it suitable for UAV applications.

The chip is used on a board called Jupiter alongside a mainstream video controller chip. The board measures 50 x 50 mm and is set to be part of the Beresheet 2 Lunar Mission for an Israeli lunar spacecraft.

Beresheet 2 is a three-craft mission to the Moon: one orbiter and two landers. The orbiter is planned to launch from Earth while carrying the two landers, arrive at the Moon, safely release the landers at different times and orbit the Moon for several years. The landers are planned to be released from the orbiter and land at different sites on the Moon. Each spacecraft is performing at least one scientific mission.

The Jupiter board will be used for the landers and the orbiter to oversee camera operation, image processing and additional processing including hosting a landing algorithm.

Using a separate video encoder and AI chip allows a single-frame buffer to supply both the encoder in the video chip for transmission and AI chip for image identification.

This architecture also opens up the possibility of transcoding by passing the decoded video to the AI chip and re-coding for lower bandwidth using AI techniques. This is key for using NASA’s low-bitrate Deep Space Network that is used to send signals from the Moon.

Analogue in memory AI

Using an analogue AI inference technique has reduced the power consumption from 8-10 W to 3-4 W, allowing such devices to be used on UAVs.

It uses a memory technology to store analogue values for the weights for the AI framework, avoiding the need to keep going off-chip to external memory, thereby cutting the power consumption.

One reference design for a UAV uses an analogue AI chip that measures 19 x 15.5 mm in a ball grid array package. This has been mounted on an M.2-format board measuring 22 x 80 mm for image processing, and provides a four-lane PCIe 2.1 interface with up to 2 Gbyte/s of bandwidth to the system CPU, as well as 10 general-purpose I/Os, I2Cs and UARTs to link to other parts of the UAV.

The analogue AI chip has 76 analogue tiles that handle the ML frameworks in a more power-efficient way than a digital GPU. Because the ML frameworks are executed in a different way to the digital chips, the software compiler is key.

Deep neural network models developed in standard frameworks such as PyTorch, Caffe and TensorFlow are optimised, reduced in size and then retrained for the analogue AI chip before being processed through a specific compiler. Pre-qualified models such as the YOLOv3 image recognition framework are also available for developers to get started.

The resulting binary code and model weights, with around 80 million parameters, are then programmed into the analogue AI chip to analyse the images coming from the camera on the reference platform to identify key elements. This combination of hardware and software allows execution of models at higher resolution and lower latency for better results, and even allows multiple frameworks to be used in the chip at the same time.

(Courtesy of Renesas Electronics)

Integrated AI

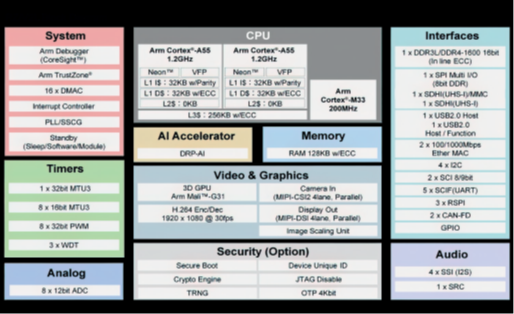

With the increasing popularity of AI accelerators, the technology is also being integrated into the chips alongside the encoders. One controller now includes a proprietary AI engine called a Dynamically Reconfigurable Processor (DRP-AI) alongside an ARM Cortex-A55 1.2 GHz processor core and a video encoder that can handle 1080p video at 30 frames per second with H.264 coding.

The AI engine reduces processing time and power consumption by pre-processing images to reduce the CPU’s workload, and can accelerate multiple algorithms in a single application and offload the main processor for specialised tasks. New configurations can be dynamically loaded into the engine in 1 ms.

The AI engine provides both real-time AI inference and image processing functions with the capabilities essential for camera support such as colour correction and noise reduction. This enables customers to implement AI-based vision applications without requiring an external image signal processor.

Software elements for the engine use downloadable configuration code for the programmable data path hardware and state transition controller (STC), and complex algorithms are broken down into smaller ‘contexts’ implemented in the programmable data path hardware.

The STC switches between individual operations during processing, and changes the next required operation in a single clock cycle.

This provides a configurable data path processing hardware for implementing complex algorithms, which are available in a library of functions. Developers can work with design partners to develop custom libraries.

The power efficiency of the AI engine eliminates the need for heat dissipation measures such as heat sinks or cooling fans.

The chip has a more traditional HDMI interface than MIPI CSI-2, but that allows it to be package- and pin-compatible with current video encoder devices. This compatibility allows vision system board makers to upgrade easily from one with an encoder chip to one with an encoder plus AI without having to modify the system configuration, keeping migration costs low.

Conclusion

(Courtesy of Aries Embedded)

The demand for higher resolution image sensors, more cameras, AI analysis and remote operation is driving the development of both silicon chips and boards for vision systems. Increasing the performance of the encoders for H.264 and H.265 is improving the quality of images being transmitted, and is offering more flexibility for managing the bandwidth of links. AI on a UAV or UGV is also reducing the need to send huge amounts of data back to an operator for analysis.

New optimisation techniques across the entire transmission chain, including the internet, are enhancing the remote control of uncrewed platforms in the air, on the ground, and on other planets.

Acknowledgements

The author would like to thank Israel Bar at Maris-Tech, Les Litwin at Antrica, Evert van Schuppen at Ascent Vision and Andreas Widder at Aries Embedded for their help with researching this article.

Encoding standards

H.264

The Advanced Video Coding (AVC) standard, also called H.264 or MPEG-4 Part 10, was drafted in 2003 and is based on block-oriented, motion-compensated coding. It supports resolutions of up to and including 8K Ultra HD (UHD) by using a reduced-complexity integer discrete cosine transform (integer DCT), variable block-size segmentation and motion prediction between frames.

It works by comparing different parts of a frame of video to find areas that are redundant, both within a single frame and between consecutive frames. These areas are then replaced with a short description instead of the original pixels.
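Finding redundancy between consecutive frames is usually done by block matching: for each block in the current frame, the encoder searches the previous frame for the best-matching block and sends only the offset (the motion vector) plus any small residual. The following is a minimal pure-Python sketch of an exhaustive search using the sum of absolute differences (SAD); the function names, block size and search range are illustrative assumptions, and real encoders use far faster search strategies.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]


def get_block(frame: Frame, top: int, left: int, size: int) -> Frame:
    """Cut a size x size block out of a frame."""
    return [row[left:left + size] for row in frame[top:top + size]]


def sad(a: Frame, b: Frame) -> int:
    """Sum of absolute differences between two equal-sized blocks."""
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def best_motion_vector(prev: Frame, curr: Frame, top: int, left: int,
                       size: int = 4, search: int = 2
                       ) -> Optional[Tuple[int, int, int]]:
    """Return (cost, dy, dx) of the best match in prev for a block in curr."""
    target = get_block(curr, top, left, size)
    height, width = len(prev), len(prev[0])
    best: Optional[Tuple[int, int, int]] = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            if y < 0 or x < 0 or y + size > height or x + size > width:
                continue  # candidate block falls outside the frame
            cost = sad(target, get_block(prev, y, x, size))
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    return best


# Synthetic 8 x 8 frame, then the same content shifted one pixel right.
prev_frame = [[y * 8 + x for x in range(8)] for y in range(8)]
curr_frame = [[row[0]] + row[:-1] for row in prev_frame]
print(best_motion_vector(prev_frame, curr_frame, top=2, left=2))
```

A cost of zero means the block can be described entirely by its motion vector, which is the "short description" that replaces the original pixels.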

The aim of the standard was to provide enough flexibility for applications on a wide variety of networks and systems, including low and high bit rates, low and high resolution video, broadcast and IP packet networks, hence its popularity.

H.265

High Efficiency Video Coding (HEVC), also known as H.265 or MPEG-H Part 2, was drafted in 2013. It provides better data compression of 25-50% over H.264 at the same level of video quality, or much improved video quality at the same bit rate. It supports resolutions up to 8192 x 4320, including 8K UHD.

While AVC uses the integer DCT with blocks of 4 x 4 and 8 x 8 pixels, HEVC uses variable block sizes between 4 x 4 and 32 x 32 depending on the complexity of the image and the motion estimation. This provides more compression for simpler images that don’t change quickly, and so reduces the overall data rate.
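The reason simple image areas compress well can be seen in the 4 x 4 integer core transform used by H.264. The sketch below applies the forward transform to a flat block and to a horizontal gradient; it omits the scaling and quantisation stages that a real encoder applies afterwards, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[int]]

# Forward 4 x 4 integer core transform matrix of H.264.
CORE: Matrix = [
    [1, 1, 1, 1],
    [2, 1, -1, -2],
    [1, -1, -1, 1],
    [1, -2, 2, -1],
]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain integer matrix multiplication."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def forward_core_transform(block: Matrix) -> Matrix:
    """Compute CORE * block * CORE^T using integer arithmetic only."""
    return matmul(matmul(CORE, block), transpose(CORE))


def nonzero_count(coeffs: Matrix) -> int:
    """Number of coefficients that would still need to be coded."""
    return sum(1 for row in coeffs for c in row if c != 0)


flat = [[10] * 4 for _ in range(4)]
gradient = [[0, 1, 2, 3] for _ in range(4)]
print(nonzero_count(forward_core_transform(flat)))
print(nonzero_count(forward_core_transform(gradient)))
```

A flat block collapses to a single coefficient, while more detailed content spreads energy across more coefficients. Larger transform blocks extend the same benefit to larger uniform areas.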

The primary changes for HEVC include the expansion of the pattern comparison and difference-coding areas from 16 x 16 pixel to sizes up to 64 x 64, with an additional filtering step called sample adaptive offset filtering.

Effective use of these improvements requires much more signal processing capability for compressing the video, but has less impact on the amount of computation needed for decompression.

H.266

Versatile Video Coding (VVC), also known as H.266, ISO/IEC 23090-3 or MPEG-I Part 3, was finalised in July 2020. As with AVC and HEVC, it aims to improve the compression performance and support a broad range of applications.

VVC has about 50% better compression for the same image quality than HEVC, which would take an SD feed down to around 500 kbit/s, although it also has support for lossless and subjectively lossless compression. It supports resolutions ranging from very low resolution up to 4K and 16K with frame refresh rates of up to 120 Hz.
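The compression figures quoted for the three standards can be chained together as rough arithmetic. The sketch below assumes that "X% better compression" means an X% lower bit rate at the same quality, which is an interpretation rather than a definition from the standards.

```python
def bitrate_before_saving(bitrate_after_kbps: float,
                          saving_fraction: float) -> float:
    """Bit rate of the older codec, given the newer codec's rate and saving."""
    return bitrate_after_kbps / (1.0 - saving_fraction)


VVC_SD_KBPS = 500.0  # SD feed with VVC, from the text

# VVC saves about 50% over HEVC.
hevc_sd_kbps = bitrate_before_saving(VVC_SD_KBPS, 0.50)
# HEVC saves 25-50% over H.264.
avc_sd_low_kbps = bitrate_before_saving(hevc_sd_kbps, 0.25)
avc_sd_high_kbps = bitrate_before_saving(hevc_sd_kbps, 0.50)

print(f"HEVC SD feed: about {hevc_sd_kbps:.0f} kbit/s")
print(f"H.264 SD feed: about {avc_sd_low_kbps:.0f}-{avc_sd_high_kbps:.0f} kbit/s")
```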

It also supports more colours (YCbCr 4:4:4, 4:2:2 and 4:2:0) with 8-10 bits per component, a wide colour gamut and high dynamic range with peak brightness of 1000, 4000 and 10000 nits. Work on high bit-depth support (12 and 16 bits per component) began in October 2020 and is ongoing.

However, VVC has yet to be widely adopted in hardware for robotic systems. That is mainly because the complexity of the encoder is 10 times that of HEVC, so an encoder would have to be more than 10 times larger or 10 times faster, and would consume 10 times more power in the same technology as the HEVC encoder.

Two generations of chip-making technology will increase the density of transistors by a factor of four, and increase the speed by a factor of two, enabling an H.266 encoder in a suitable power envelope. This could be possible in a leading-edge chipmaking process, although the cost of the chips would be prohibitive.
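The scaling argument can be checked with simple arithmetic. The sketch below assumes that the density and speed gains multiply into a single throughput gain for a fixed die area, and that the gains compound smoothly per process generation; both are simplifications made for illustration.

```python
import math


def combined_gain(generations: float,
                  density_per_two_gens: float = 4.0,
                  speed_per_two_gens: float = 2.0) -> float:
    """Throughput gain for a fixed die area after a number of generations."""
    per_two_gens = density_per_two_gens * speed_per_two_gens
    return per_two_gens ** (generations / 2.0)


def generations_to_match(complexity_ratio: float,
                         density_per_two_gens: float = 4.0,
                         speed_per_two_gens: float = 2.0) -> float:
    """Generations needed before the gain equals the extra complexity."""
    per_two_gens = density_per_two_gens * speed_per_two_gens
    return 2.0 * math.log(complexity_ratio) / math.log(per_two_gens)


print(f"gain after two generations: {combined_gain(2):.1f}x")
print(f"generations to reach 10x: {generations_to_match(10.0):.2f}")
```

Under these assumptions, two generations deliver roughly eight of the ten times needed, which is why an H.266 encoder becomes plausible at that point rather than comfortable.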

Interface standards

The latest version of the MIPI CSI-2 interface provides low-power operation in UAVs. Introduced in 2005, it has become popular as the interface from an embedded camera to a processor.

It is typically implemented for shorter-reach applications on either a D-PHY or C-PHY physical-layer interface, which converts the parallel data from the raw image from the camera sensor into a high-speed serial link, or lane, and deserialises it at the other end, hence SerDes (see main text).

D-PHY has been widely adopted for several years, and provides up to 4.5 Gbit/s per lane, although that is set to increase to 9 Gbit/s per lane to support larger image sensors with higher resolution, which requires more bits to be sent.
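The link between sensor resolution and lane rate is straightforward to estimate. The sketch below computes the raw pixel data rate and the number of D-PHY lanes it would occupy; it ignores blanking intervals and protocol overhead unless an overhead factor is supplied, and the example sensor modes are assumptions for illustration.

```python
import math


def raw_video_gbps(width: int, height: int, bits_per_pixel: int,
                   fps: float, overhead: float = 1.0) -> float:
    """Raw pixel data rate in Gbit/s, optionally scaled for overhead."""
    return width * height * bits_per_pixel * fps * overhead / 1e9


def lanes_needed(width: int, height: int, bits_per_pixel: int, fps: float,
                 lane_gbps: float = 4.5, overhead: float = 1.0) -> int:
    """Minimum number of lanes at a given per-lane rate."""
    rate = raw_video_gbps(width, height, bits_per_pixel, fps, overhead)
    return math.ceil(rate / lane_gbps)


# A 4K sensor at 60 frames per second with 10-bit raw pixels.
print(f"{raw_video_gbps(3840, 2160, 10, 60):.2f} Gbit/s")
print(lanes_needed(3840, 2160, 10, 60, lane_gbps=4.5))
print(lanes_needed(3840, 2160, 10, 60, lane_gbps=9.0))
```

Doubling the per-lane rate halves the number of lanes, and so the number of signals that have to be routed through a gimbal.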

D-PHY is a source synchronous architecture, with a dedicated clock lane and two operating modes for efficiency.

One is a high-speed mode with differential signalling for data transfer, the other is a low-power mode with single-ended 1.2 V signalling for control and handshake data.

MIPI C-PHY, introduced in 2014, uses a three-wire link and three-phase encoding to provide a total bandwidth of up to 6.0 Gsymbols/s across all three wires.
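A symbol rate is not the same as a bit rate. C-PHY maps 16 bits onto seven symbols, so each symbol carries about 2.28 bits; the mapping ratio is not stated in this article and is included here as background. The sketch below converts the quoted symbol rate into an approximate bit rate for one three-wire link.

```python
BITS_PER_GROUP = 16     # C-PHY maps 16 bits...
SYMBOLS_PER_GROUP = 7   # ...onto seven symbols


def cphy_gbps(symbol_rate_gsps: float) -> float:
    """Approximate payload bit rate of one three-wire C-PHY link."""
    return symbol_rate_gsps * BITS_PER_GROUP / SYMBOLS_PER_GROUP


print(f"{cphy_gbps(6.0):.1f} Gbit/s at 6.0 Gsymbols/s")
```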

The encoding and mapping of the signals ensure that the three wires are synchronised, which helps with the clock and data recovery on the receiver as the physical layer doesn’t have a dedicated lane for the clock but uses an embedded clock.

Because these are both developed under the MIPI Alliance, they can be combined to coexist on one set of pins, reusing most of the circuits except for the line drivers and receivers. That means one PHY block in a chip can operate in either C-PHY mode or D-PHY mode, depending on what the image sensor is using.

V4.0 is the first to support transmission of CSI-2 image frames over the low pin-count I3C two-wire interface. CSI-2 can also be implemented over the A-PHY long-reach SerDes interface (up to 15 m) for autonomous vehicles.

CSI-2 V4.0 also adds multi-pixel compression for the latest generation of advanced image sensors, and RAW28 colour depth to improve the image quality and signal-to-noise ratio.

An Always-On Sentinel Conduit feature enables a vision signal processor (VSP) in a subsystem to continuously monitor its surrounding environment and then trigger the higher-power, host CPUs only when significant events happen. Typically, the VSP will be either a separate device or integrated with the host CPU within a larger chip.

This architecture can be used for vision-based vehicle safety applications, and enables image frames to be economically streamed from an image sensor to a VSP over a low-power MIPI I3C bus.

The RAW28 pixel encoding supports the next generation of high dynamic range automotive image sensors for ADAS and other safety-critical applications.

CSI-2 V4.0 is backwards compatible with all previous versions of the MIPI specification.

Examples of video systems manufacturers and suppliers

AUSTRALIA

| AVT | +61 265 811 994 | www.ascentvision.com |

CHINA

| HiSilicon | +86 21 28780808 | www.hisilicon.com |

FRANCE

| Vitec | +33 1 4673 0606 | www.vitec.com |

GERMANY

| Aries Embedded | +49 8141 363670 | www.aries-embedded.com |

ISRAEL

| GetSat | +972 7 6530 0700 | www.getsat.com |

| Controp | +972 9 744 0661 | www.controp.com |

| Maris-Tech | +972 72 242 4022 | www.maris-tech.com |

JAPAN

| Thine Electronics | +81 3 5217 6660 | www.thine.co.jp |

| Renesas Electronics | +81 3 6773 3000 | www.renesas.com |

THE NETHERLANDS

| NXP Semiconductors | +31 40 272 8686 | www.nxp.com |

UK

| Antrica | +44 1628 626098 | www.antrica.com |

| Vision4ce | +44 1189 797904 | www.vision4ce.com |

USA

| Ascent Vision | +1 406 388 2092 | www.ascentvision.com |

| Curtiss-Wright | +1 704 481 1150 | www.curtisswright.com |

| Delta Digital Video | +1 215 657-5270 | www.deltadigitalvideo.com |

| EIZO | +1 407 262 7100 | www.eizorugged.com |

| Intel | +1 916 377 7000 | www.intel.com |

| MAPIR | +1 877 949 1684 | www.mapir.camera |

| missionGO | +1 410 390 0500 | www.missiongo.io |

| Nvidia | +1 408 486 2000 | www.nvidia.com |

| OmniVision | +1 408 567 3000 | www.ovt.com |

| Qualcomm | +1 858 587 1121 | www.qualcomm.com |

| SightLine Applications | +1 541 716 5137 | www.sightlineapplications.com |

| Socionext | +1 408 550 6861 | www.socionext.com |

| Triad RF Systems | +1 855 558 1001 | www.triadrf.com |

| UAVOS | +1 650 584 3176 | www.uavos.com |

| VersaLogic | +1 503 747 2261 | www.versalogic.com |

| Videology | +1 401 949 5332 | www.videologyinc.com |
