uWare’s uOne UUV

The free-moving uOne can do it all – hover, accelerate, change direction and gather data energy-efficiently – through quick, shallow water missions. Rory Jackson reports

Many of today’s most successful UAVs leverage precise flight and hover controls with valuable payloads to unlock new forms of data gathering and intelligent behaviour across survey, indoor inspection and cinematography operations.

Belgium-based uWare Robotics is one of a new breed of AUV manufacturer that is specifically aiming to offer an autonomous submersible that replicates these functions of multi-rotor air vehicles in a marine environment, navigating freely in all directions, and hovering with the agility and dynamism of a multirotor UAV to survey and inspect underwater.

That product offering is the uOne, a 50 x 40 x 40 cm AUV. Electric battery-powered with eight thrusters, it has a maximum speed of 3 knots (0.5 knots cruise) and endurance of two to three hours between battery swaps.

The system weighs 18 kg in the air (with up to 5 kg of payload capacity). As a light-duty, easily deployable micro-AUV, it is currently depth-rated to 75 m, operates in waters with temperatures of 0-35 °C and can be deployed within 10 minutes by a single operator.

That makes the uOne easier to deploy than many other AUVs; it additionally operates primarily using visual navigation, integrating a frontal stereo camera as well as a bottom-facing one, with robust decision-making algorithms to allow precise and repeatable operations even in uncharted waters.

This has been achieved while keeping the AUV cost-effective and hence more accessible to a wide range of users, and ongoing advances in the uOne’s decision-making will enable it to autonomously identify and prioritise points of interest during inspections – essentially thinking and acting with the intelligence of a human diver.

From concept to reality

The uOne originated from an idea for an underwater robot that would follow a diver as they swam around, videoing them and recording their activities, as well as any surrounding biodiversity and terrain.

This concept came from tech entrepreneur Christophe Chatillon, founder and now chairman of the board at uWare. The first brainstorming session to flesh out the hardware and software required to bring this idea to reality occurred during what was meant to be a job interview for robotics engineers Sayri Arteaga Michaux, co-founder and CEO, and Meidi Garcia, co-founder and lead engineer for data and AI.

“Christophe had huge previous experience and success developing software, but what he lacked was robotics engineering skill and how to apply software in that field,” Arteaga recounts.

“The interview quickly turned into us talking about how we’d engineer this robot. Our ideas were exactly in-sync with what he wanted – he’d just lacked the precise terms or knowledge to start engineering it.”

Niche work

As the three developed the design, they came to better understand the needs and challenges of professionals working underwater, and they determined that a vast niche existed for something between ROVs operated via tether in shallow waters and AUVs working in the deep sea for weeks at a time.

In particular, a wide range of industries operating in coastal waters – including marine research, infrastructure inspection, ports, shipping and construction – require regular visual inspection of their underwater assets. The current practice predominantly involves the use of divers or ROVs for visual examination and assessment. However, deploying divers carries inherent risks and tends to be slow, which can escalate the overall inspection cost.

Additionally, while ROVs mitigate some risks, their movement is limited due to being tethered, and they require skilled operators to avoid getting entangled on other objects while efficiently gathering high-quality data. Furthermore, traditional torpedo-shaped AUVs, commonly employed in underwater exploration, are not ideally suited for navigating efficiently in or around complex structures, nor can they hover to gather data effectively. These limitations, coupled with their typically high cost, further complicate their use in detailed and precise marine inspections.

“We came to realise that the robot we were developing should be a smart, agile, cost-effective and easy to deploy solution, which could change the way asset owners intervene underwater. Having a small, one person-portable, autonomous robot liberates you from needing diver teams, very skilled operation teams, cranes, tethers and much more,” Arteaga explains.

“Combining autonomous robotics with a software platform capable of very advanced data analytics liberates the user from onerous data work. No-one has to be a robotics engineer or data scientist to get all the useful subsea data reports they might need. Our last demonstration of the uOne took just 10 minutes to show step-by-step how the AUV works, and that anyone can put the robot underwater, plan its mission and get visualisations of their data in less than a day.”

Development strategy

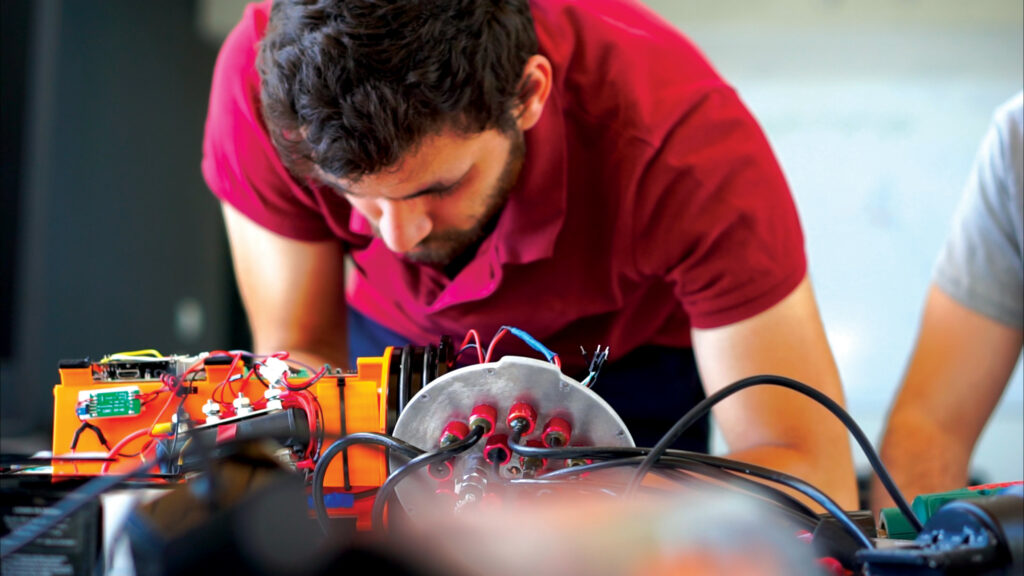

Rapid iterative development of the robot followed that first meeting between the three co-founders. The initial phase of development, spanning a little over a year from September 2018 to October 2019, focused on extensive hardware development. Crucially, it involved testing and validating the main design choices in real-world environments.

Among the key decisions were the adoption of an eight-vectored thruster configuration, which greatly enhanced manoeuvrability and stability, and the design of a slimmer yet robust modular frame to protect the watertight enclosure holding all the internal electronic systems.

“Each iteration brought significant mechanical modifications from its predecessor, focusing primarily on the robot’s movement and essential operating functions. These progressive versions enabled us to experiment with and validate various approaches to optimise the system for our specific use case, simultaneously facilitating the development of our more advanced software algorithms,” Garcia says.

Arteaga and Garcia then embarked on developing advanced software algorithms from early 2019.

“By the end of 2022, we had significantly increased the robot’s intelligence. Starting with thruster-control strategies, we progressed to cutting-edge localisation and navigation algorithms. The high point of this phase saw the integration of advanced decision-making capabilities, empowering the robot to autonomously navigate and adapt to unexpected environmental changes and obstacles,” Arteaga recounts.

The graphical user interface (GUI) of the robot evolved in parallel with its growing autonomy. It now allows users to program missions with varying levels of environmental knowledge. The GUI provides options ranging from precise GPS waypoint navigation to broad area-exploration commands, reflecting the robot’s sophisticated level of autonomous operation.

Alongside the core development of hardware and software, the pair also put considerable effort into advancing their data capabilities, with a particular focus on enhancing the machine-learning algorithms. Every project undertaken enabled them to gather more data, which could then be used to refine and improve the algorithms.

“One of the biggest challenges any company faces in performing underwater visual inspections is the scarcity of data; thankfully, we’ve spent much of the past five years diligently collecting underwater data,” Garcia says.

System architecture

Key constraints influencing the uOne’s internal arrangement and mounting of subsystems are its cylindrical watertight enclosure, EMI and heat dissipation.

“Within the cylindrical body, our main CPUs [central processing units] are very close to our IMU [inertial measurement unit]. That isn’t inherently problematic, but then there’s also our powerpack to consider, and all the current running from that to our thrusters, just a couple of centimetres from our magnetometer,” Arteaga says.

“We would sometimes have spikes of 150 A, a few centimetres from our navigation sensors. To mitigate that, we’ve optimised the interior architecture such that navigation sensors are at the top of the cylinder, touching the frame, and high-power cables run in the cylinder’s nadir – so the sensors and power cables are as far apart as possible.

“On top of that, the high-power wires are EMI-insulated. We validated that setup in various tests and noticed those two measures were enough to ensure our navigation accuracy target,” he adds.
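To see why a 150 A spike a few centimetres from a magnetometer is a concern, the field around a single long, straight conductor can be estimated as B = μ0·I/(2πr). The sketch below is a worst-case illustration only: it assumes one unpaired wire, whereas a real harness with adjacent supply and return conductors produces a much smaller net field. The distances are illustrative, not uWare measurements. For scale, the Earth's field that a magnetometer relies on for heading is in the order of tens of microtesla.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability in T*m/A


def wire_field_microtesla(current_a: float, distance_m: float) -> float:
    """Field magnitude around a long straight wire: B = mu0 * I / (2 * pi * r)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return MU_0 * current_a / (2 * math.pi * distance_m) * 1e6


# Illustrative separations between a power cable and the navigation sensors.
for r_cm in (2, 5, 10):
    field = wire_field_microtesla(150.0, r_cm / 100)
    print(f"{r_cm:>2} cm from a 150 A conductor: {field:7.1f} uT")
```

Because the field falls off with 1/r, every extra centimetre of separation helps, which is consistent with placing the sensors and the high-power cables at opposite sides of the cylinder.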

Notably, the fourth version of uOne had a separate battery enclosure below the main electronics enclosure. This approach can be seen in some ROV designs, particularly those whose engineers like the passive stabilisation benefits of keeping the centre of gravity (CoG) low.

Agile movement

In the fifth and final uOne, however, which was designed not for passive stabilisation but equal freedom of movement in all directions, keeping the battery below the main housing would have constricted the AUV’s ability to pitch and roll. Hence the battery is mounted in the middle of the hull.

Sacrificing passive stabilisation means the AUV can hold any position and orientation with minimal thrust, power and hence energy consumption, assuming nothing is touching or pulling it (including underwater currents).

“The way uOne moves is very unusual and unexpected for any kind of underwater vehicle. Some sensors customised specifically for us just wouldn’t work, because IMUs and DVLs [doppler velocity logs] for the underwater space often have filters that omit readings of our kinds of movements as errors, even though they are precisely what we are trying to pull off – to have the agility of a UAV in an underwater drone,” Arteaga says.

The final challenge of heat dissipation was the most troublesome for uOne’s tests and missions in the Mediterranean, where waters can reach 30 °C in the summer and cause internal heat to build up to the point where AUV computers and sensors approach their upper temperature limits of about 80 °C.

Outside such conditions, heat dissipation tends not to present a fundamental problem within the two-hour mission timeframe (with the surrounding ocean otherwise functioning well as a heat sink for the AUV’s acrylic body), but to widen the uOne’s operating envelope, uWare plans to switch from the acrylic hull to an aluminium one in the near future, providing even more thermal conductivity, and hence heat dissipation from hull to water.

“We plan on attaching the various computers’ heat sinks to the aluminium frame to cool those through conduction, as water will be flowing near the watertight enclosure,” Arteaga adds.
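As a rough illustration of why the hull material matters, steady one-dimensional conduction through a wall follows Fourier's law, Q = k·A·ΔT/t. The sketch below compares the temperature drop needed across an acrylic wall and an aluminium wall to pass the same heat load. The conductivities are typical handbook values (about 0.19 W/m·K for cast acrylic, about 167 W/m·K for 6061 aluminium); the wall thickness, wetted area and 50 W load are illustrative assumptions rather than uWare figures, and the model ignores the air gap between the electronics and the hull.

```python
K_ACRYLIC_W_MK = 0.19     # typical handbook value for cast PMMA (assumption)
K_ALUMINIUM_W_MK = 167.0  # typical handbook value for 6061 alloy (assumption)


def wall_temperature_drop_k(heat_w, conductivity_w_mk,
                            thickness_m=0.005, area_m2=0.1):
    """Temperature difference across a flat wall needed to conduct heat_w."""
    return heat_w * thickness_m / (conductivity_w_mk * area_m2)


for name, k in (("acrylic", K_ACRYLIC_W_MK), ("aluminium", K_ALUMINIUM_W_MK)):
    drop = wall_temperature_drop_k(50.0, k)
    print(f"{name:>9}: {drop:.3f} K across the wall for a 50 W load")
```

Under these assumptions the acrylic wall itself accounts for a double-digit temperature drop, while the aluminium wall is effectively transparent to heat, so bonding heat sinks directly to the metal becomes worthwhile.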

Hull and payload

While some commentators have told uWare that the uOne’s hull shape is not hydrodynamic, Arteaga responds: “It’s irrelevant to optimise hydrodynamics at the speeds we move and with the range of movement in different directions we have. If all we wanted was to move forwards fast, we would’ve done the same torpedo shape that almost every AUV has. That philosophy runs throughout the inside and outside of our hull: there is no one perfect solution that is going to solve every problem this AUV weathers.”

Instead, the issues prioritised in the structural design include protection, stability and modularity. Protection encompasses everything needed to prevent saltwater corrosion of the internal subsystems. To that end, the internals are housed, as noted, within a watertight enclosure, which is currently made from cast acrylic (also known as poly(methyl methacrylate) or PMMA) and will be manufactured from aluminium in the future. Some PETG (polyethylene terephthalate glycol) components are also used as non-watertight elements, such as the thruster and payload mounts.

“It is worth noting that the aluminium hull won’t have any significant effect on mission runtime, be it in terms of increased weight or reduced heat build-ups, but in addition to functioning in warmer waters it will also be able to go deeper and use newer payloads, like aluminium diving lamps that are rated to 300 m depths,” Arteaga notes.

Stability comes largely from the thrusters, but their symmetrical arrangement around the hull (and the placement of the battery in the middle) ensures good balance of mass and buoyancy across all orientations.

For modularity, the hull’s watertight enclosure is surrounded by a frame that includes rails for attaching external payloads. These rails enable multiple different sensor arrangements, with one of the most complex including three cameras mounted on arms extending in different directions from the AUV’s undercarriage and one camera on the top side, with further payloads on the sides (as tested by uWare for a project requiring multi-angled underwater vision).

Computing edge

Keeping the uOne small and easily one-person portable has limited the quantity of computers and processing power that uWare can fit inside, so managing heat build-up has been crucial to avoid thermally overwhelming these systems.

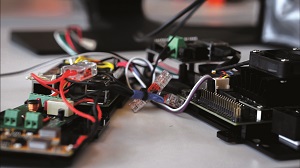

The main control board features two computers. A Raspberry Pi 4 (RPI4) is used as the main control and navigation computer, responsible for the execution of navigation and decision arbitration algorithms, while an NVIDIA Jetson Orin Nano is used for the execution of the machine learning and vision algorithms, for which access to an embedded GPU is required.

“The decision to use commercial computers such as the RPI4 or NVIDIA Jetson platforms originates from the idea of keeping things simple and focusing on the most critical aspects related to autonomous underwater navigation, as well as leveraging our internal knowledge of optimised software development for embedded platforms,” says Arteaga.

“Moreover, while using RPI computers was not considered a professional approach a couple of years ago, this is not the case anymore. Nowadays, those platforms offer the ideal trade-off between computing capabilities and power consumption for all mobile robotics projects.”

The main control board features a plethora of input buses to take data from the onboard camera systems, pressure barometer, DVL and IMU. Although an input port for a ranging sonar is also present, the stereo vision camera has effectively replaced sonar in uWare’s obstacle-avoidance approach. The IMU is mounted on a separate board, along with a 5 V power-distribution module and an I2C switchboard.

I2C and UART are the main communications interfaces used between the main computing unit board and the rest of the subsystems. While the Jetson Orin and RPI4 are connected via ethernet, some sensors and actuators – such as the barometer, DVL, IMU, thruster-control system and cameras – are connected using either I2C, UART, MIPI CSI-2 or USB.

“If the Jetson Orin should stop functioning mid-mission, the RPI4 can still continue. For instance, if the AUV has a preprogrammed survey path, it can still autonomously follow that using, for instance, the bottom camera or any external payloads for viewing and recording pipelines or other infrastructure below. But it will try to send the operator an alert via the acoustic modem that says it no longer has its machine vision and asks if they want it to continue the mission or return to the point of deployment,” says Arteaga.
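The fallback Arteaga describes can be pictured as a small state machine on the navigation computer: watch for a heartbeat from the vision computer, raise one alert when it disappears, keep following the preprogrammed path, and act on the operator's reply. The sketch below is a hypothetical illustration of that logic; the class, mode names, timeout and alert text are all assumptions and do not reflect uWare's actual software.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    FULL_AUTONOMY = "full_autonomy"
    DEGRADED_AWAIT_OPERATOR = "degraded_await_operator"  # still on survey path
    DEGRADED_CONTINUE = "degraded_continue"
    RETURN_TO_DEPLOYMENT = "return_to_deployment"


@dataclass
class Arbiter:
    heartbeat_timeout_s: float = 2.0  # assumed value
    last_heartbeat_s: float = 0.0
    mode: Mode = Mode.FULL_AUTONOMY

    def on_vision_heartbeat(self, now_s: float) -> None:
        """Record that the vision computer reported in at time now_s."""
        self.last_heartbeat_s = now_s

    def tick(self, now_s: float):
        """Return an alert string once when vision is lost, otherwise None."""
        lost = now_s - self.last_heartbeat_s > self.heartbeat_timeout_s
        if lost and self.mode is Mode.FULL_AUTONOMY:
            self.mode = Mode.DEGRADED_AWAIT_OPERATOR
            return "VISION_LOST: continue mission or return?"
        return None

    def on_operator_reply(self, keep_going: bool) -> None:
        """Apply the operator's choice received over the acoustic link."""
        if self.mode is Mode.DEGRADED_AWAIT_OPERATOR:
            self.mode = (Mode.DEGRADED_CONTINUE if keep_going
                         else Mode.RETURN_TO_DEPLOYMENT)


arbiter = Arbiter()
arbiter.on_vision_heartbeat(1.0)
print(arbiter.tick(2.0))   # heartbeat still fresh
print(arbiter.tick(4.5))   # heartbeat stale, alert raised once
arbiter.on_operator_reply(keep_going=False)
print(arbiter.mode)
```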

An additional board mounts the company’s proprietary acoustic modem electronics, as well as a 12 V power-distribution board. The last board is responsible for motor control, mounting eight electronic speed controllers (ESCs) – one per thruster – and a power input from the battery, as well as data input from the BMS.

Unique touches

Although uWare has sought commercial off-the-shelf (COTS) components to avoid reinventing the wheel where possible, a few components have been made in-house ad-hoc to fit the uOne’s evolving needs. For example, its own voltage regulators were developed after going from four to eight thrusters to increase current throughput by 5x without incurring the expense of a market-ready component capable of doing that.

A similar approach has been followed for the creation of a proprietary acoustic modem. “Currently, the most affordable acoustic modems cost a couple of thousand dollars and their specifications are not aligned with our requirements,” Arteaga explains.

“They’re built to send tiny packets of data over multiple kilometres, sometimes up to 10 km, and we don’t need that kind of transmission distance in a short-range AUV. We therefore built one that costs just a couple of hundred euros, with a maximum range of just 2 km and increased data rate.”

Additionally, uWare plans to “replace” the cables running between the boards with a single, unified, printed circuit board (PCB), which embeds all the components of the four boards mentioned, improving the overall speed and reliability of all the data interconnections, and hence functions, while optimising the space available in the watertight enclosure.

Track and sense

The uOne’s IMU is a COTS component from Bosch, which integrates an accelerometer with a Zero-G Offset of -150 to +150 mg and a noise-density output of 150-190 μg/√Hz, as well as a gyroscope with a Zero Rate Offset of -3 to 3°/s and 0.1 to 0.3°/s noise output, along with a magnetometer with a Zero-B Offset of 40 μT.

Its DVL, supplied by Water Linked, integrates a four-beam convex Janus array transducer with a frequency of 1 MHz and a 22.5° beam angle, as well as a ping rate of 4-15 Hz. It operates within altitudes of 0.05-50 m from the seabed at a maximum velocity of 3.75 m/s.
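A four-beam Janus DVL measures the vehicle's velocity along each slanted beam, and the three-axis body velocity is recovered by combining the four readings. The sketch below shows that geometry for a 22.5° beam angle. The beam azimuths (45°, 135°, 225°, 315°), the axis convention (x forward, y starboard, z down) and the sign of the radial velocity are assumptions chosen for illustration; the actual Water Linked unit may use different conventions and reports velocities already resolved.

```python
import math

BEAM_ANGLE = math.radians(22.5)  # beam tilt from the vertical axis
AZIMUTHS = [math.radians(a) for a in (45.0, 135.0, 225.0, 315.0)]  # assumed


def beam_unit_vectors():
    """Unit vector of each beam in the body frame (x fwd, y stbd, z down)."""
    s, c = math.sin(BEAM_ANGLE), math.cos(BEAM_ANGLE)
    return [(s * math.cos(a), s * math.sin(a), c) for a in AZIMUTHS]


def beams_from_velocity(v):
    """Radial velocity seen by each beam for body velocity v = (vx, vy, vz)."""
    return [sum(vi * ui for vi, ui in zip(v, u)) for u in beam_unit_vectors()]


def velocity_from_beams(r):
    """Least-squares body velocity from four radial beam velocities.

    With four beams spaced 90 degrees apart the normal equations are
    diagonal, so each axis has a closed-form solution.
    """
    s, c = math.sin(BEAM_ANGLE), math.cos(BEAM_ANGLE)
    vx = sum(ri * math.cos(a) for ri, a in zip(r, AZIMUTHS)) / (2 * s)
    vy = sum(ri * math.sin(a) for ri, a in zip(r, AZIMUTHS)) / (2 * s)
    vz = sum(r) / (4 * c)
    return vx, vy, vz


beams = beams_from_velocity((0.25, -0.10, 0.05))
print([round(b, 4) for b in beams])
print([round(v, 4) for v in velocity_from_beams(beams)])
```

The small beam angle means horizontal motion produces only a modest radial component on each beam, which is one reason beam noise translates into larger noise on the horizontal velocity than on the vertical one.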

While the uOne’s guidance and motion tracking comes from the standard combination of an IMU and DVL, for localisation, uWare has forgone beacon-based systems such as ultra-short baseline (USBL) and long baseline (LBL), as well as sonar-based simultaneous localisation and mapping (SLAM), in favour of a stereo camera-based point cloud-generation approach, which will eventually be used as a visual-inertial form of SLAM.

“To make a system that can work anywhere without any specific infrastructure, we couldn’t rely on USBL or LBL, because then you have to deploy the beacons for acoustically triangulating the AUV’s position, retrieve them afterwards, and somehow avoid all the kinds of multipathing issues that happen in ports and affect your mission-critical positioning accuracy,” Arteaga says.

“Meidi and I do have experience in SLAM algorithms, and those can be installed in and used via our Jetson Orin SoC, although camera-based underwater SLAM is unusual compared with sonar-based subsea SLAM. But, as mentioned, using cameras over sonar was eventually a no-brainer for us to detect things like cracks in pipes at shallow depths.”

While the use of cameras may raise questions over how the uOne handles poor visibility conditions, the inertial navigation typically takes over to ensure reliability when visual input is limited.

The robot can also recognise and decide when conditions prevent visual data acquisition at the standards held by industry for acceptable quality of data acquisition. In such instances, it will send a message to the user that the water quality is not ideal and it will not proceed to the data acquisition waypoints.

Additionally, the robot’s modularity allows for future enhancements, and uWare is considering the integration of sonar systems to improve performance in low-visibility environments.

Stereo vision

Initially, Arteaga and Garcia developed a SLAM algorithm for use with a single camera, which benefits from simplicity, but lacks the ability to gauge the scale of objects being viewed without complex additional motion-reference subroutines, particularly when underwater refraction can make objects appear larger than they really are.

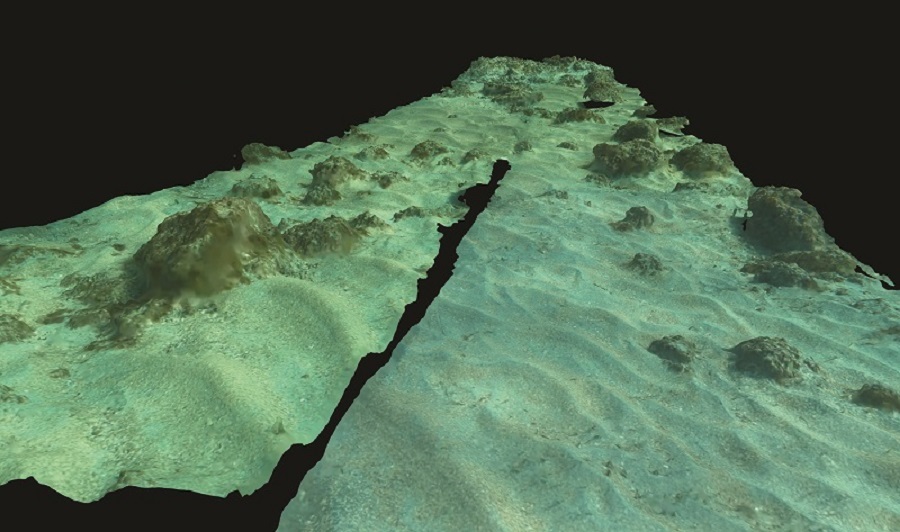

Combining this mono-camera SLAM with the IMU-DVL provided good results in tests, but it reached a limit of usefulness when trying to localise in confined areas (and the ability to survey with extreme precision inside underwater compartments was one of uWare’s key aims). Instead, the company developed its stereo vision module, through which a navigable point cloud is created in real time. This point cloud is currently used for obstacle detection and to avoid collisions by adapting the AUV’s trajectory in real time.

In future, the point cloud will be used to generate a map of the AUV’s surroundings, which will then be used to locate itself by merging that information with the IMU-DVL sensor data. At that point, the system will fit the company’s envisioned visual-inertial SLAM model.

After researching commercially available stereo vision systems, uWare purchased a module for testing. Having validated the approach with that commercial module, the company decided to build its own stereo vision module to meet its specific needs: compatibility with the rest of the system, more accurate depth estimation and better overall performance in low-light environments.

“Like the acoustic module, we toned down from commercial solutions to something lighter-duty and more cost-effective, so we don’t need a stereo vision module running at 300 FPS [frames per second]. It’s just unnecessary when you’re working in the slow underwater world – 10-20 FPS is plenty for the uOne,” Arteaga says.

“We then built a custom stereo camera by connecting two cameras to a computer to process and synchronise the two vision feeds. The first version performed as needed in tests, generating clouds of points for localisation. Integrated into the uOne, it runs Meidi’s object-detection algorithms perfectly: it recognises fish around the robot, as well as humans, rocks, pipes and other objects.”

The two cameras in the stereo module are spaced 8 cm apart, each featuring a 1080p resolution, a horizontal field of view (FoV) of 62.2° (48.8° vertical) and running at 5-10 FPS.
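These figures are enough for a back-of-envelope look at how a stereo pair turns pixel disparity into range, using the pinhole relation Z = f·B/d. The sketch below assumes 1080p means 1,920 pixels across, treats the quoted 62.2° horizontal FoV as the in-water value and ignores lens distortion; behind a flat port the effective FoV narrows underwater, so real numbers would differ. It illustrates the geometry only and is not uWare's depth pipeline.

```python
import math

IMAGE_WIDTH_PX = 1920  # assumed width of a 1080p frame
HFOV_DEG = 62.2        # horizontal field of view quoted in the article
BASELINE_M = 0.08      # 8 cm camera spacing quoted in the article


def focal_length_px(width_px=IMAGE_WIDTH_PX, hfov_deg=HFOV_DEG):
    """Pinhole focal length in pixels from image width and horizontal FoV."""
    return (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def depth_from_disparity(disparity_px, baseline_m=BASELINE_M):
    """Range to a point given its left/right pixel disparity: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px() * baseline_m / disparity_px


def depth_error_per_pixel(depth_m, baseline_m=BASELINE_M):
    """Approximate range error for one pixel of disparity error: Z^2 / (f * B)."""
    return depth_m ** 2 / (focal_length_px() * baseline_m)


for disparity in (255, 64, 32):
    z = depth_from_disparity(disparity)
    print(f"disparity {disparity:>3} px -> {z:.2f} m "
          f"(about {depth_error_per_pixel(z) * 100:.1f} cm per pixel of error)")
```

The quadratic growth of range error with distance is why a short 8 cm baseline suits close-range obstacle detection and inspection rather than long-range mapping.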

Smart navigation

Future work will aim to have the AI recognise further actionable details of the objects that the uOne views, enabling smarter and more precise navigation around obstacles or closer photography of cracks in infrastructure.

Garcia explains: “Computer vision has been of interest to us since starting the company, with the initial aim of getting an AUV to follow a diver underwater. But it would’ve taken a highly customised implementation of computer-vision algorithms to detect and track a diver, and I think we were running everything on a Raspberry Pi 2 or 3 at the time.

“We started by using traditional computer-vision algorithms, like tracking pipes based on detecting contours and trying to recreate the shape of the pipe, and those can work, but they’re difficult to generalise, which you need to do if your AUV is going to successfully inspect all kinds of pipes everywhere.

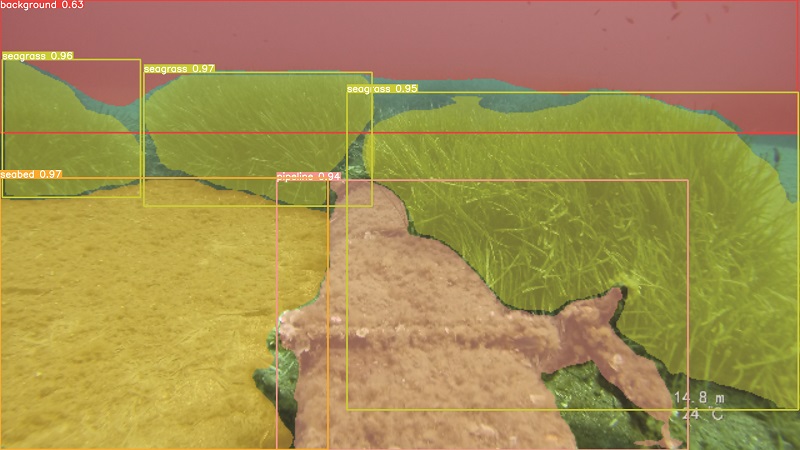

“Luckily, the longer the company existed, the more underwater imagery we could collect, both ourselves and from partner organisations, until eventually we were able to transition into machine-learning models for recognising not just pipes but other things like different species of seagrass, which is important for some prospective customers, like environmental groups,” Garcia adds.

Visual-inertial fusion

Combining the stereo vision with inertial and DVL navigation enables the uOne to localise itself similarly to a human walking through a large field, approximating its position and avoiding collisions via visual references of its surroundings and inertial references of its trajectory taken through them, with the visual navigation also helping to correct IMU drift, as a global navigation satellite system (GNSS) would.
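The drift-correction idea can be shown with a deliberately simple one-dimensional example: integrate a slightly biased velocity measurement, then pull the estimate towards an occasional position fix derived from vision. The sketch below uses a basic complementary-filter update with an assumed gain, bias and fix interval; it is a teaching illustration, not the estimator running on the uOne.

```python
def dead_reckon(velocities_m_s, dt_s):
    """Integrate measured velocity only; any sensor bias accumulates as drift."""
    x, track = 0.0, []
    for v in velocities_m_s:
        x += v * dt_s
        track.append(x)
    return track


def fuse(velocities_m_s, dt_s, visual_fixes_m, gain=0.5):
    """Same integration, but nudge the estimate towards each visual fix."""
    x, track = 0.0, []
    for step, v in enumerate(velocities_m_s):
        x += v * dt_s                               # predict from velocity
        if step in visual_fixes_m:
            x += gain * (visual_fixes_m[step] - x)  # correct with vision
        track.append(x)
    return track


# Toy run: true speed 0.25 m/s, measured with a +0.02 m/s bias for 100 s,
# with one visual position fix every 10 s (all values are assumptions).
true_speed, bias, steps = 0.25, 0.02, 100
measured = [true_speed + bias] * steps
fixes = {k: true_speed * (k + 1) for k in range(9, steps, 10)}
true_final = true_speed * steps

print("drift without vision:", round(dead_reckon(measured, 1.0)[-1] - true_final, 2))
print("error with vision:   ", round(fuse(measured, 1.0, fixes)[-1] - true_final, 2))
```

In this toy run the uncorrected estimate drifts by about 2 m, while the corrected one stays within roughly 0.2 m, bounded by how much bias accumulates between fixes.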

“Vision-inertial fusion also enables it to hunt intelligently for visual tags, such as identification markers unique to one specific ship parked among many others, or physical changes in a pipe’s support structures, compared to what the uOne viewed in or around each support structure the last time it inspected them,” Garcia notes.

“If it views those sorts of changes, it can stop and take hundreds or even thousands of closer-up pictures of them at varying angles in order to produce a better 3D reconstruction of that spot in particular. That could be a crack in a pipe support that has grown over time, or a piece of coral in a reef that has bleached or been damaged.

“Those are the sorts of things that, historically, only human divers could be trusted to do intelligently, so over time, the way operators plan missions with our robot will be like they’re interacting with a more and more experienced diver, giving it gradually fewer, more high-level commands and letting it make its own decisions.”

Powerful thrust

In the eight-vectored thrust configuration, all eight thrusters tend to be active across most of the uOne’s movements, which contributes to its ability to move in all degrees of freedom.

Arteaga comments: “We’re happy with the Blue Robotics thrusters. We might not use them forever; we might look into hubless thrusters as those are less vulnerable to debris, ropes or seagrass tangling in the props, but as we have eight thrusters we have really good propulsion redundancy.

“Once, we were testing the uOne and two thrusters started malfunctioning post-launch, but the control system just ran the remaining six thrusters well enough to compensate. It had to zig-zag a little during some movements, but it still carried out the mission just fine, and our next-generation PCB layout will give even better individual control of each thruster, including shutting off malfunctioning thrusters to reduce the extent of compensatory thrust needed.”
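Redundancy of this kind is usually handled by a thrust-allocation step: the controller asks for a six-element wrench (three forces, three moments), and the allocator finds the thrust for each healthy thruster that reproduces it. The sketch below uses a minimum-norm solution, u = Bᵀ(BBᵀ)⁻¹τ, on an invented layout with one thruster at each corner of a 50 x 40 x 40 cm box. The thruster positions and directions are purely hypothetical, chosen so the maths is well behaved; they are not the uOne's geometry, and uWare's control law is not described in this article.

```python
import itertools
import math

HALF_DIMS = (0.25, 0.20, 0.20)  # half of 50 x 40 x 40 cm; corner mounts assumed


def thruster_columns():
    """(Fx, Fy, Fz, Mx, My, Mz) per unit thrust for 8 hypothetical thrusters."""
    k = 1 / math.sqrt(3)
    cols = []
    for sx, sy, sz in itertools.product((1, -1), repeat=3):
        p = (sx * HALF_DIMS[0], sy * HALF_DIMS[1], sz * HALF_DIMS[2])
        d = (k * sx * sy, k * sy * sz, k * sx * sz)  # assumed unit direction
        moment = (p[1] * d[2] - p[2] * d[1],
                  p[2] * d[0] - p[0] * d[2],
                  p[0] * d[1] - p[1] * d[0])         # p x d
        cols.append(d + moment)
    return cols


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("healthy thrusters no longer span all six axes")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def allocate(wrench, failed=()):
    """Minimum-norm thrusts reproducing wrench with the healthy thrusters."""
    cols = thruster_columns()
    healthy = [i for i in range(len(cols)) if i not in failed]
    b = [[cols[i][row] for i in healthy] for row in range(6)]
    bbt = [[sum(x * y for x, y in zip(r1, r2)) for r2 in b] for r1 in b]
    lam = solve(bbt, list(wrench))
    thrust = [0.0] * len(cols)  # failed thrusters stay at zero
    for j, i in enumerate(healthy):
        thrust[i] = sum(b[row][j] * lam[row] for row in range(6))
    return thrust


def produced_wrench(thrust):
    """Wrench actually generated by a set of thrusts."""
    cols = thruster_columns()
    return [sum(t * col[row] for t, col in zip(thrust, cols)) for row in range(6)]


demand = [5.0, 0.0, 0.0, 0.0, 0.0, 0.5]  # surge force plus a yaw moment
print([round(t, 2) for t in allocate(demand)])
print([round(t, 2) for t in allocate(demand, failed=(0, 7))])
```

With two thrusters shut off, the remaining six work harder and less evenly to deliver the same wrench. In this toy layout not every pair of failures is recoverable, in which case `solve` raises an error instead of returning a misleading answer.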

Although the ESCs running the Blue Robotics thrusters in the uOne each draw up to 25 A at maximum thrust, they can also create severe spikes in current when the robot brakes or changes direction. Such is the scale of these spikes that, without adequate power management, other onboard components (including the main computer) could be starved of current, a phenomenon known as voltage dip (British English) or sag (US English).

“That’s why we have dedicated 5 V, 12 V and other power-distribution systems to make sure everything is getting the minimal amount of power needed to operate,” Arteaga says.

“Each thruster can draw up to 25 A of current, and we have eight, so up to 200 A, theoretically. In practice, the robot never maxes all thrusters simultaneously; good surveys require either very slow speeds or complete stops.”

High currents

The power-distribution and ESC PCBs have been designed in-house to handle such high-current throughput. Additionally, the BMS measures the current drawn and output to monitor and regulate power, so voltage dip and other unwanted power-distribution conditions are avoided. The battery is a 4s pack, much like typical UAV packs, with 16 Ah of capacity; it supplies power at 14.8 V and recharges over two hours.

“We can operate up to three hours on that 16 Ah of capacity, much of which comes from optimising our power-distribution system. It helps that the uOne is neutrally buoyant, so we’re not constantly using power to actively push it down or up when we just need it stationary,” Garcia adds.
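The quoted pack figures allow a quick power budget. The sketch below works out the nominal stored energy and the average draw implied by a two- or three-hour mission, alongside the theoretical peak of eight thrusters at 25 A each. It assumes the full 16 Ah is usable at the nominal 14.8 V, which a real pack and BMS would not allow, so the averages are upper bounds.

```python
NOMINAL_VOLTAGE_V = 14.8
CAPACITY_AH = 16.0
THRUSTER_COUNT = 8
PEAK_CURRENT_PER_THRUSTER_A = 25.0


def pack_energy_wh():
    """Nominal stored energy: voltage times capacity."""
    return NOMINAL_VOLTAGE_V * CAPACITY_AH


def average_current_a(endurance_h):
    """Mean current that empties the pack in endurance_h hours."""
    return CAPACITY_AH / endurance_h


def average_power_w(endurance_h):
    """Mean power that empties the pack in endurance_h hours."""
    return pack_energy_wh() / endurance_h


def theoretical_peak_current_a():
    """All thrusters at maximum draw at once (never reached in practice)."""
    return THRUSTER_COUNT * PEAK_CURRENT_PER_THRUSTER_A


print(f"pack energy: {pack_energy_wh():.1f} Wh")
for hours in (2.0, 3.0):
    print(f"{hours:.0f} h mission: {average_current_a(hours):.1f} A, "
          f"{average_power_w(hours):.0f} W on average")
print(f"theoretical peak: {theoretical_peak_current_a():.0f} A")
```

The gap between an average of a few amps and a theoretical 200 A peak shows why the power-distribution boards are sized for transients rather than for the cruise load.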

Future improvements to the BMS will enable recharging of the battery without removing it from the AUV, although battery swapping is expected to remain a popular option with users, as each swap takes around 10 minutes (divers, by comparison, need 30 minutes above water to physically recover between dives).

“One survey day, we used a uOne to map a hectare of seafloor, and that took three trips with two battery swaps; hence, only 20 minutes of downtime for 10,000 m² of real photogrammetric data, which was beyond where humans or ROVs could go alone,” Arteaga notes.

Data links

The RPI4 communicates with the acoustic modem via UART, with the modem broadcasting high-level operations telemetry via binary frequency shift keying (BFSK) and, if necessary, receiving commands via the same BFSK protocol.

“That’s a very basic protocol, sending 0s and 1s, but we’ve designed the acoustic modem to be built on top of that to improve on basic BFSK little by little, with advanced algorithms for features like noise reduction, error correction, multipath mitigation and increased bandwidth, which the modem will embed in its dedicated computer for receiving and formatting information,” Garcia says.

Similar in nature to a radio modem, the acoustic modem uses an analogue-to-digital converter, along with an arrangement of filters and amplifiers to retrieve signals received by the acoustic transducer and deconstruct them into digital signals to be interpreted and used by the main computer via UART.
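Basic BFSK can be illustrated in a few lines: each bit is sent as a short burst of one of two tones, and the receiver decides which tone carries more energy in each bit period. The sketch below is a software-only toy with assumed tone frequencies, sample rate and bit duration; it does not model the transducer, filters, multipath or uWare's proprietary improvements.

```python
import math
import random

SAMPLE_RATE_HZ = 48_000  # assumed
SYMBOL_SAMPLES = 480     # 10 ms per bit, i.e. 100 bit/s (assumed)
FREQ_ZERO_HZ = 9_000     # tone for a 0 bit (assumed)
FREQ_ONE_HZ = 11_000     # tone for a 1 bit (assumed)


def modulate(bits):
    """Turn bits into audio samples, one tone burst per bit."""
    samples = []
    for bit in bits:
        freq = FREQ_ONE_HZ if bit else FREQ_ZERO_HZ
        for n in range(SYMBOL_SAMPLES):
            samples.append(math.sin(2 * math.pi * freq * n / SAMPLE_RATE_HZ))
    return samples


def tone_energy(chunk, freq_hz):
    """Energy of chunk at freq_hz, found by correlating with sine and cosine."""
    i = sum(s * math.cos(2 * math.pi * freq_hz * n / SAMPLE_RATE_HZ)
            for n, s in enumerate(chunk))
    q = sum(s * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE_HZ)
            for n, s in enumerate(chunk))
    return i * i + q * q


def demodulate(samples):
    """Recover bits by comparing the energy at the two tones per bit period."""
    bits = []
    for start in range(0, len(samples) - SYMBOL_SAMPLES + 1, SYMBOL_SAMPLES):
        chunk = samples[start:start + SYMBOL_SAMPLES]
        one = tone_energy(chunk, FREQ_ONE_HZ)
        zero = tone_energy(chunk, FREQ_ZERO_HZ)
        bits.append(1 if one > zero else 0)
    return bits


message = [1, 0, 1, 1, 0, 0, 1, 0]
rng = random.Random(0)
noisy = [s + rng.gauss(0.0, 1.0) for s in modulate(message)]  # heavy noise
print(demodulate(noisy) == message)
```

Both tones complete a whole number of cycles per bit, which keeps them orthogonal over the bit period and makes the energy comparison robust even when the noise is as large as the signal.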

“We don’t depend on constant acoustic communication between the AUV and user – it’s really just there to ease the user’s mind. If they’ve never worked with AUVs before, it can really stress them to just sit there for two hours not knowing if the robot is still operating properly,” Arteaga says.

“Instead, every 30 seconds they get an update, basically reporting all systems are working okay and a very brief summary of what the robot is doing; and if something like a subsystem goes wrong or an underwater object is missing or really damaged, we want the user to be alerted immediately via a short message.”

Hence the acoustic modem enables an uplink for persistently streaming small packets of rudimentary but lengthy information, as well as a downlink for very short, one-off command packets from the user (so the user’s choice of ground-control station (GCS) must have a transducer and acoustic modem connected). The comms algorithms include error-correction subroutines and encoding protocols for outgoing messages to compress the information as much as possible for further comms efficiency.

“It’s an open channel, so the AUV is constantly listening – no excessive energy consumed to do so – and when specific commands from the user are received, saying maybe, ‘give me a full status update’ or ‘change your action’, the onboard modem and computer both execute the command, and answer back an acknowledgement,” Arteaga says.
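At low acoustic bit rates, every byte in a status update counts, so periodic messages tend to be packed into fixed binary frames with a checksum. The sketch below shows one hypothetical layout for a heartbeat: message type, elapsed time, depth, battery level and status flags, followed by a 16-bit CRC. The field choice, sizes and message ID are assumptions for illustration, and the CRC only detects corruption; the error correction mentioned above would need an additional code on top.

```python
import binascii
import struct

STATUS_TYPE = 0x01                # hypothetical message ID
BODY = struct.Struct(">BHHBB")    # type, elapsed s, depth cm, battery %, flags


def encode_status(elapsed_s, depth_m, battery_pct, flags):
    """Pack a heartbeat into 9 bytes: 7-byte body plus 2-byte CRC."""
    body = BODY.pack(STATUS_TYPE, elapsed_s, round(depth_m * 100),
                     battery_pct, flags)
    return body + struct.pack(">H", binascii.crc_hqx(body, 0xFFFF))


def decode_status(frame):
    """Check the CRC and unpack a heartbeat; raise ValueError if corrupted."""
    body, (crc,) = frame[:-2], struct.unpack(">H", frame[-2:])
    if binascii.crc_hqx(body, 0xFFFF) != crc:
        raise ValueError("CRC mismatch")
    msg_type, elapsed_s, depth_cm, battery_pct, flags = BODY.unpack(body)
    return {"type": msg_type, "elapsed_s": elapsed_s,
            "depth_m": depth_cm / 100, "battery_pct": battery_pct,
            "flags": flags}


frame = encode_status(elapsed_s=1830, depth_m=12.34, battery_pct=71, flags=0)
print(len(frame), "bytes:", frame.hex())
print(decode_status(frame))
```

At a notional 100 bit/s, a 9-byte frame takes well under a second to send, which leaves plenty of margin inside a 30-second update interval.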

Shore and cloud

Persistent mission monitoring is enabled via an IP68-rated topside computer offered by uWare, which links to a modem and a transducer in the water. It can stream mission and vehicle information to the user’s device via 2.4 GHz or 5 GHz wi-fi. Alternatively, it can come with a screen, keyboard and mouse if the user would rather use that computer as a GCS.

As well as receiving the aforementioned updates, users can plan and monitor the mission via a GUI running locally on their device, although uWare notes that its topside computer is small enough that some missions have been launched and concluded offshore from paddleboards (eliminating the need for even a small boat).

The front end of the GUI closely resembles UAV planning software, with top-down GNSS map views for creating survey routes and geofences, including heights above seafloor, approximate depths, spacings and orientations, with in-built wizards that make recommendations on such parameters to the user before the plan is uploaded to the uOne.

Once the mission plan is uploaded, the user receives estimates of the mission length and battery energy consumption, and potential alerts of how far the uOne will get before needing to return for a battery swap. It can then be placed in the water – 2 m deep and 1 m in front of the user is recommended – and it will wait there until the user commands it to start the mission.
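An estimate of this kind can be approximated from the survey geometry alone. The sketch below computes the track length of a simple back-and-forth ("lawnmower") pattern over a rectangle, converts it to time at the 0.5-knot operating speed and divides by the three-hour maximum endurance to count dives and battery swaps. The line spacing is an assumed parameter, and the model ignores transit to the survey area, turns, currents and battery reserve, so it is not uWare's planner.

```python
import math

KNOT_M_PER_S = 1852 / 3600  # one nautical mile per hour


def lawnmower_length_m(width_m, height_m, spacing_m):
    """Track length of parallel survey lines plus the hops between them."""
    lines = math.floor(width_m / spacing_m) + 1
    return lines * height_m + (lines - 1) * spacing_m


def estimate_mission(width_m, height_m, spacing_m,
                     speed_knots=0.5, endurance_h=3.0):
    """Rough duration and number of dives for a rectangular survey."""
    track_m = lawnmower_length_m(width_m, height_m, spacing_m)
    duration_h = track_m / (speed_knots * KNOT_M_PER_S) / 3600
    dives = math.ceil(duration_h / endurance_h)
    return {"track_m": track_m, "duration_h": duration_h,
            "dives": dives, "battery_swaps": dives - 1}


# One hectare (100 m x 100 m) with an assumed 1.5 m line spacing.
plan = estimate_mission(100.0, 100.0, 1.5)
print(f"{plan['track_m']:.0f} m of track, {plan['duration_h']:.1f} h, "
      f"{plan['dives']} dives, {plan['battery_swaps']} battery swaps")
```

With that assumed spacing, a one-hectare survey comes out at three dives and two battery swaps, the same order as the survey day Arteaga describes, although the spacing actually used there is not stated.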

During the mission, all data is stored inside the uOne in a solid-state drive (SSD) device and can be retrieved by wi-fi or wired ethernet (or by retrieving the SSD) between missions. Post-mission, the data can be downloaded to the user’s device and sent to uWare’s cloud storage server (with around 100 GB of data collected during longer missions).

“The cloud storage server connects with two other cloud-based servers. One generates visualisations for users, building 3D models, GNSS-estimated orthomosaics and those kinds of valuable data products, and also applying deep-learning algorithms for analytics to highlight and geo-tag cracks, seagrass, underwater ruins and other features that users might find important,” Garcia says.

“The user can access and download all of those freely, along with all the stereo and bottom [camera] video captured, graphs of water pressures and temperatures recorded over time, and so on.

“The other is an internal server, dedicated to building our machine-learning pipelines, through which we further refine our models, not just for smarter vision but for features like navigating more efficiently amid strong currents or thick seagrass.”

Data within days

Processing the data via the cloud can now be accomplished within a couple of days (compared with weeks in some cases), although uWare plans to offer faster processing times in the future.

“Programming cloud functions isn’t very different to programming a robot. You’re just dealing with another level of communication and different constraints. In the robot we have limited resources, but with cloud providers we can just spin new instances as we go,” Garcia explains. “That means we’ll face different pricings, but at [the] day’s end it’s all about programming processes and data exchanges carefully.”

Arteaga adds: “Coming from robotics, we tend to think in different abstraction layers. We think of modular systems and subsystems, electronic parts talking with mechanical parts, and software running in the middle. A cloud isn’t too different: a server runs software, which talks to another software program on another server. We, as engineers, can be agnostic to what we’re building, programming and optimising; if enough information is successfully coming through, that means it works.”

Into the future

With the uOne now at commercial technical readiness, uWare is focused on advancing the intelligence of its AUV and data analytics.

“It might sound strange, but we’re starting to discover a new world of possibilities of what we can do with the data that we and our users gather, and bringing robots like ours to different users around the world is honestly going to open something of a new era of working and intervening underwater,” Arteaga says.

“That’s going to bring a lot of benefits for safeguarding oceans and coasts against damage from wars or human-made accidents, and tracking the effects of climate change to plan disaster-response efforts and marine restoration activities.”

Specifications

- uOne

- AUV

- Battery-electric

- Cast acrylic hull

- Dimensions: 50 x 40 x 40 cm

- Maximum weight in air: 18 kg

- Payload capacity: 5 kg

- Maximum speed: 3 knots

- Operating speed: 0.5 knots

- Maximum endurance: 3 hours

- Environmental operating temperatures: 0-35 °C

Some key suppliers

- Thrusters: Blue Robotics

- Watertight enclosures: Blue Robotics

- Barometer: Blue Robotics

- Data processing and logging computer: NVIDIA

- Main navigation and control unit: Raspberry Pi

- IMU: Bosch

- DVL: Water Linked

- Transducer: DSPComm

- Bottom-looking camera: DeepWater Exploration
