Sense and Simulation

Quantum computing and virtual worlds are helping to test the performance of driverless vehicle sensor systems, writes Nick Flaherty

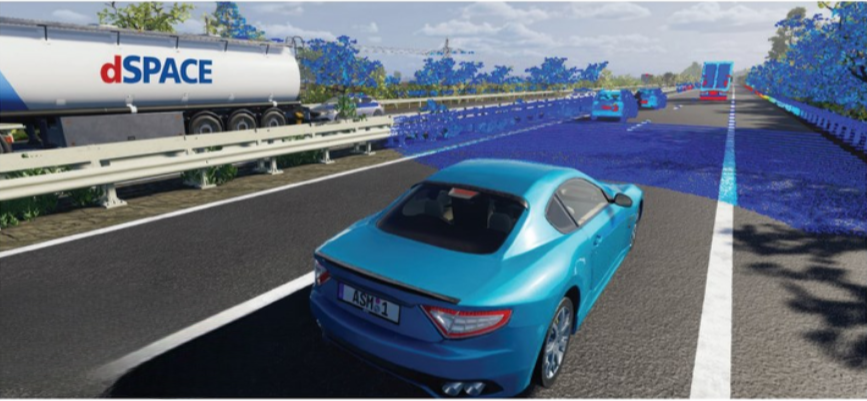

A wide range of testing and simulation strategies are being used to develop and evaluate autonomous technologies. Building virtual worlds that accurately represent reality, and populating them with accurate models, helps to test the performance of driverless vehicle sensor systems.

Computational fluid dynamics simulation tools are used to improve the design and development of uncrewed aircraft, testing system designs and components before they are built, and providing helpful data much earlier in the design process.

Then, there is also the challenge of ensuring the models are accurate, and the virtual systems are good representations of the physical ones. The virtual models can then be gradually replaced using hardware in the loop (HiL) until the system is ready.

Simulation technologies are also evolving, adding generative artificial intelligence (genAI) to create complex test scenarios from a simple text prompt, and quantum algorithms that promise to dramatically accelerate the simulation of aerospace radar and communication systems.

Digital twins

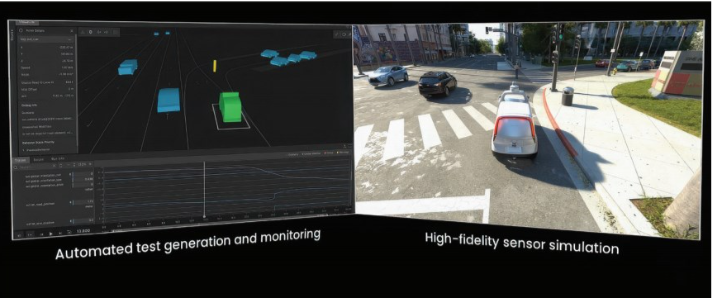

Creating a purpose-built autonomous vehicle (AV) simulation platform is not a simple undertaking. Previous simulation systems have used game engines to provide a virtual environment, but this has not been enough to build scientific, physically accurate and repeatable simulations.

So platforms are being designed and built from the ground up to support simulation across many GPUs to provide physically accurate, ray-tracing rendering. As the performance of GPUs increases, larger environments can be displayed or more sensors included.

AV simulation can only be an effective development tool if scenarios are repeatable and timing is accurate. Digital-twin tools can schedule and manage all sensor and environment rendering functions so that results stay repeatable, without loss of accuracy, even with detailed surroundings and test vehicles carrying complex sensor suites. The tools can also run these workloads slower or faster than real time while still generating repeatable results.
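One way to see why repeatability and timing go together is a fixed-step loop in which simulated time depends only on the step count, never on the wall clock. The sketch below is illustrative rather than any vendor's scheduler; the sensor names, update periods and noise model are assumptions.

```python
import random

def run_simulation(seed, sim_duration_s=1.0, step_s=0.01, sensor_periods_s=None):
    """Advance simulated time in fixed steps and log sensor samples.

    Simulated time depends only on the step count, never on the wall clock,
    so the run can execute slower or faster than real time and still
    produce the same log.
    """
    if sensor_periods_s is None:
        # Hypothetical sensor update periods, in seconds.
        sensor_periods_s = {"camera": 0.04, "lidar": 0.10, "radar": 0.05}
    rng = random.Random(seed)  # seeded so sensor noise is reproducible
    # Work in integer ticks to avoid floating-point drift in the schedule.
    periods_ticks = {name: round(p / step_s) for name, p in sensor_periods_s.items()}
    n_steps = round(sim_duration_s / step_s)
    log = []
    for tick in range(n_steps):
        for name in sorted(periods_ticks):  # fixed order keeps the log deterministic
            if tick % periods_ticks[name] == 0:
                noise = rng.gauss(0.0, 0.01)
                log.append((tick, name, noise))
    return log

first = run_simulation(seed=42)
second = run_simulation(seed=42)
print(first == second)  # same seed and step size give an identical log
```

Because nothing in the loop reads the system clock, the same seed and step size reproduce the same sequence of sensor samples however quickly the host machine runs.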

In addition to accurately recreating real-world driving conditions, the simulation environment must also render vehicle sensor data in the exact same way that cameras, radars and Lidars take in data from the physical world.

The digital twin also has to work with more sophisticated simulation tools, particularly solvers for computational fluid dynamics. These tools can model an entire vehicle and dive down into the different components, and understand drag and range. The models could be as simple as a spreadsheet or full-blown 3D systems.

This is particularly popular with battery pack developers for simulating thermal runaway. Developers run a bench test and use that data to input into the modelling software. This could be as simple as a look-up table, but it could be replaced with full details of all the chemistry if test data is not available.
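At the simple end of that range, a look-up table built from bench-test data might be interpolated as in the sketch below. The temperature and heat-release values are placeholders for illustration, not measurements, and the function name is hypothetical.

```python
from bisect import bisect_right

# Hypothetical bench-test data: cell temperature (deg C) vs heat release (W).
TEMPS_C = [20.0, 60.0, 100.0, 140.0, 180.0]
HEAT_W = [0.0, 0.5, 3.0, 25.0, 400.0]

def heat_release_w(temp_c):
    """Linearly interpolate heat release from the bench-test table.

    Inputs outside the measured range are clamped to the end points
    rather than extrapolated.
    """
    if temp_c <= TEMPS_C[0]:
        return HEAT_W[0]
    if temp_c >= TEMPS_C[-1]:
        return HEAT_W[-1]
    hi = bisect_right(TEMPS_C, temp_c)
    lo = hi - 1
    frac = (temp_c - TEMPS_C[lo]) / (TEMPS_C[hi] - TEMPS_C[lo])
    return HEAT_W[lo] + frac * (HEAT_W[hi] - HEAT_W[lo])

print(heat_release_w(120.0))
```

Clamping at the table edges is a deliberate choice here: a table derived from tests says nothing reliable about temperatures that were never measured.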

One advantage is that solvers are moving to add GPU acceleration, which is seeing an increase in performance of about 600% when moving from running on a processor to a GPU. These solvers are also using machine learning (ML) and even genAI in the optimisation software for mapping heat, building response surface models and design exploration. This helps to predict the next design changes.

This is automating the building of force maps for aerospace, capturing the control surface deflections, and building a whole model of the aircraft to put the data into the control system algorithm.

This enables a full wind-tunnel test in simulation, covering many parameters that couldn’t be tested in a physical scenario. It generates the responses that could occur at extreme attitudes and forces, and provides the control algorithm with that model, so the basis for flight testing is in place before the first prototype flight.

However, all of this complexity increasingly requires an ecosystem of tools that can interact, so the applications programming interface (API) of the tools is key (see below). Computational Fluid Dynamics (CFD) is coupled to the structures, design and mission profiles, so a wide range of tools need to interact effectively and accurately.

Rendering

Validating automated driving software requires millions of test kilometres. This not only implies long system development cycles with continuously increasing complexity, but it also brings the problem that real-world testing is resource intensive, and safety issues may also arise.

Closed-loop evaluation requires a 3D environment that accurately represents real-world scenarios. One of the key aspects is to create a 3D environment that is as realistic as possible so that a digital twin can be a virtual environment in which to perform tests. This has moved from 3D artists creating high-density maps with stationary objects to a variety of locations and scenarios.

The locations are brought into the virtual scenes using neural reconstruction, with neural network technologies such as NeRFs (neural radiance fields), while 3D Gaussian Splatting provides scalability.

A NeRF is a neural network that can reconstruct complex 3D scenes from a partial set of 2D images. Images in 3D are required in various simulations, gaming, media and Internet of Things (IoT) applications to make digital interactions more realistic and accurate. The NeRF learns the geometry, objects and angles of a particular scene, and then it renders photorealistic 3D views from novel viewpoints, automatically generating synthetic data to fill in any gaps.

How to combine this with the simulated assets is not so easy to solve. One idea is the neural render API to create a combined 3D environment and spawn variations, along with data such as distance to the objects to give the opportunity to modify the situation.

Another algorithm, called General Gaussian Splatting Renderer, combines speed, flexibility and improved visual fidelity. Rather than using polygons to draw an image, it uses ellipses that can be varied in size and opacity to improve the quality of the virtual environment.
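The idea of drawing with ellipses of varying size and opacity can be sketched for a single pixel: each splat contributes a Gaussian-weighted opacity, and the splats are blended from front to back. This is a generic illustration of splat compositing, not the renderer described here; the dictionary layout and all values are assumptions.

```python
import math

def gaussian_weight(px, py, cx, cy, sx, sy, theta):
    """Evaluate an elliptical 2D Gaussian footprint at pixel (px, py).

    (cx, cy) is the centre, (sx, sy) the standard deviations along the
    ellipse axes and theta the rotation of the ellipse in radians.
    """
    dx, dy = px - cx, py - cy
    # Rotate the offset into the ellipse's own axes.
    u = math.cos(theta) * dx + math.sin(theta) * dy
    v = -math.sin(theta) * dx + math.cos(theta) * dy
    return math.exp(-0.5 * ((u / sx) ** 2 + (v / sy) ** 2))

def composite_pixel(px, py, splats):
    """Blend depth-sorted splats front to back at one pixel."""
    colour = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed
    for s in sorted(splats, key=lambda s: s["depth"]):
        alpha = s["opacity"] * gaussian_weight(
            px, py, s["cx"], s["cy"], s["sx"], s["sy"], s["theta"])
        for i in range(3):
            colour[i] += transmittance * alpha * s["rgb"][i]
        transmittance *= 1.0 - alpha
    return colour, transmittance

# Two hypothetical splats centred on the same pixel: red in front, blue behind.
splats = [
    {"cx": 5.0, "cy": 5.0, "sx": 2.0, "sy": 1.0, "theta": 0.3,
     "opacity": 0.8, "depth": 2.0, "rgb": (1.0, 0.0, 0.0)},
    {"cx": 5.0, "cy": 5.0, "sx": 3.0, "sy": 3.0, "theta": 0.0,
     "opacity": 0.5, "depth": 4.0, "rgb": (0.0, 0.0, 1.0)},
]
print(composite_pixel(5.0, 5.0, splats))
```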

However, the original algorithm’s approach to Gaussian Splatting projection introduces several limitations that prevent sensor simulation. These originate from the approximation error in the projection, which can be large when simulating wide-angle cameras.
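The article does not spell out the approximation, but a common formulation of Gaussian splatting linearises the perspective projection around each Gaussian's centre. Assuming that formulation, the sketch below compares an exact pinhole projection with its first-order approximation for a point slightly behind the centre, at increasing angles from the optical axis. The function names and the 0.1 depth offset are illustrative.

```python
import math

def project(x, z, focal=1.0):
    """Exact pinhole projection of a camera-space point onto the image."""
    return focal * x / z

def project_linearised(x, z, cx, cz, focal=1.0):
    """First-order (Jacobian) approximation of project() around (cx, cz)."""
    du_dx = focal / cz
    du_dz = -focal * cx / cz ** 2
    return project(cx, cz, focal) + du_dx * (x - cx) + du_dz * (z - cz)

def linearisation_error(angle_deg, depth_offset=0.1, cz=1.0):
    """Error for a point displaced in depth from a centre at the given angle."""
    cx = cz * math.tan(math.radians(angle_deg))
    x, z = cx, cz + depth_offset
    return abs(project(x, z) - project_linearised(x, z, cx, cz))

for angle in (0, 20, 40, 60, 80):
    print(angle, linearisation_error(angle))
```

In this toy case the error is zero on the optical axis and grows with the tangent of the off-axis angle, which is consistent with wide-angle cameras being the difficult case.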

There are other challenges with these neural rendering approaches, such as inserting out-of-distribution (i.e., previously unseen) objects into the 3D environment, and artifacts or blurs may affect the appearance of dynamic objects. Geometric inconsistencies can also arise, mostly with depth prediction.

Implementing this neural rendering early on in the sensor simulation pipeline provides a versatile extension by keeping as many of the original features as possible. This improves the integration of the camera sensors, and other raytrace-based simulations such as Lidar and radar. An inability to support other sensor modalities is one of the biggest issues with most neural-rendering solutions applied in autonomous driving simulations.

Rebuilding the algorithm from scratch to work with existing rendering pipelines allows the tools to assemble images from various virtual cameras that may be distorted. This enables the digital twin to simulate high-end sensor setups with multiple cameras, even with HiL. Because of the generality of the algorithm, you can get the same results consistently from sensor models that use raytracing, such as Lidar and radar.

This improves runtime performance, as the renderer remains fast enough to work at a real-time frame rate of 30 frames/s and can also be used in HiL systems.

Developers can move around freely with the camera and use different positions or sensor setups in the simulated scenario without unpredictable artifacts or glitches. It lets developers get up close and personal with intricate details for all kinds of objects and surfaces. The number of applications can be increased even further, as the algorithm can be used in physical simulations or even for surface reconstruction, including potholes in the road.

While 3D environments can be built manually by 3D artists, they have limitations in scalability and addressing the Sim2Real domain gap. Gaussian rendering can also be significantly faster. For example, creating a virtual area of San Francisco could take 3D artists a month and a half, while it’s just a few days with neural reconstruction. That’s the big advantage: a 20 times faster production rate.

The sensor models used in a virtual environment need to be flexible, handling different lens parameters for cameras, and rotating or stationary flash Lidar.

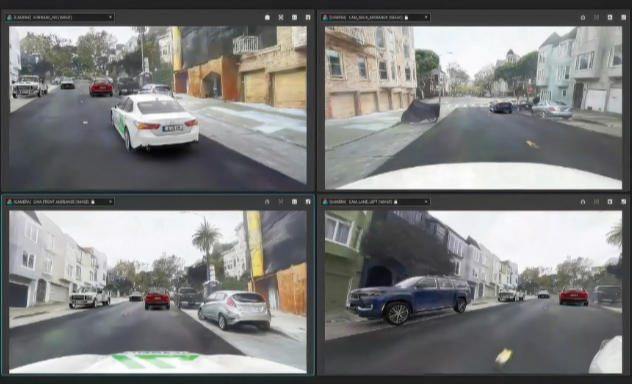

Here, it’s the complexity that is the challenge. Four cameras with suppliers’ models are not a problem, but combining the data from 12 cameras, five radars and one rotating Lidar with a map of the environment is where the challenges arise.

One way to tackle this is to use a multi-threaded approach to distribute the computation for all the sensors over multiple GPUs, which is not trivial for simulator developers. While 20 sensors on one GPU would be very slow, five to eight will provide real-time performance.
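As a rough illustration of the bookkeeping involved, the sketch below spreads a sensor suite over the fewest GPUs that keep each one at or below eight sensors. The round-robin policy and sensor names are assumptions; a real simulator would also have to weigh the rendering cost of each sensor type.

```python
import math

def assign_sensors_to_gpus(sensors, max_per_gpu=8):
    """Spread sensors round-robin over the fewest GPUs that respect the cap."""
    n_gpus = max(1, math.ceil(len(sensors) / max_per_gpu))
    assignment = {gpu: [] for gpu in range(n_gpus)}
    for i, sensor in enumerate(sensors):
        assignment[i % n_gpus].append(sensor)
    return assignment

# The 18-sensor suite mentioned above: 12 cameras, five radars, one Lidar.
suite = ([f"camera_{i}" for i in range(12)]
         + [f"radar_{i}" for i in range(5)]
         + ["lidar_0"])

for gpu, names in assign_sensors_to_gpus(suite).items():
    print(f"GPU {gpu}: {len(names)} sensors")
```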

Vital APIs

The APIs for the models are vital and increasingly have to take distributed GPUs into account.

The application-programming interfaces address the critical need for high-fidelity sensor simulations to safely explore the various real-world scenarios that autonomous systems will encounter.

CARLA, for example, is an open-source AV simulator that is used by more than 100,000 developers. APIs allow users to add high-fidelity sensor simulation from the cloud into existing workflows.

It has been developed from the ground up to support the development, training and validation of autonomous driving systems. In addition to open-source code and protocols, CARLA provides open digital assets (urban layouts, buildings, vehicles) that were created for this purpose and can be used freely.

The simulation platform supports flexible specification of sensor suites, environmental conditions, full control of all static and dynamic actors, map generation and more.

The API allows users to control all aspects related to the simulation, including traffic generation, pedestrian behaviour, weather and sensors.
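A minimal sketch of what this looks like through CARLA's Python client follows, assuming a CARLA 0.9.x server is already running locally on the default port. It covers only the weather and one camera; the image size, field of view and mounting position are arbitrary choices, and `camera_attributes` and `setup_scene` are helper names introduced for this example.

```python
def camera_attributes(width_px, height_px, fov_deg):
    """Build blueprint attributes; CARLA expects attribute values as strings."""
    return {
        "image_size_x": str(width_px),
        "image_size_y": str(height_px),
        "fov": str(fov_deg),
    }

def setup_scene(host="localhost", port=2000):
    """Connect to a running CARLA server, set the weather and attach a camera."""
    import carla  # imported here so the helper above works without CARLA installed

    client = carla.Client(host, port)
    client.set_timeout(10.0)
    world = client.get_world()

    # Fixed-step synchronous mode, so the server only advances on world.tick().
    settings = world.get_settings()
    settings.synchronous_mode = True
    settings.fixed_delta_seconds = 0.05
    world.apply_settings(settings)

    world.set_weather(carla.WeatherParameters.ClearNoon)

    library = world.get_blueprint_library()
    vehicle_bp = library.filter("vehicle.*")[0]
    spawn_point = world.get_map().get_spawn_points()[0]
    vehicle = world.spawn_actor(vehicle_bp, spawn_point)

    camera_bp = library.find("sensor.camera.rgb")
    for name, value in camera_attributes(1280, 720, 90).items():
        camera_bp.set_attribute(name, value)
    mount = carla.Transform(carla.Location(x=1.5, z=2.4))
    camera = world.spawn_actor(camera_bp, mount, attach_to=vehicle)
    return world, vehicle, camera

print(camera_attributes(1280, 720, 90))
```

Calling `setup_scene()` needs a live server, so only the attribute helper is exercised at the bottom of the block.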

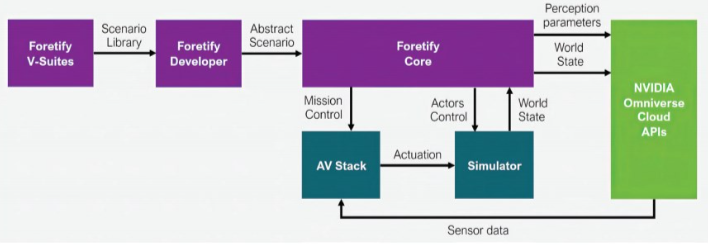

One platform uses proven coverage-driven methodologies for the verification of driverless cars. The tool generates the myriad tests required to cover all relevant scenarios that may be encountered in the real world. Scenarios are defined using ASAM OpenSCENARIO 2.0, a human-readable, scenario-description language that has been recently adopted as an industry standard.
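The coverage-driven idea can be sketched in a few lines: define the combinations that count as coverage goals, then keep generating randomised scenarios until every goal has been hit. The parameters and bins below are assumptions for illustration, and the output is plain Python tuples rather than OpenSCENARIO.

```python
import itertools
import random

# Hypothetical scenario parameters and bins; real coverage models are far larger.
PARAMETERS = {
    "weather": ["clear", "rain", "fog"],
    "time_of_day": ["day", "night"],
    "lead_vehicle": ["none", "braking", "cutting_in"],
}

def generate_until_covered(seed=0, max_tests=10_000):
    """Draw random scenarios until every parameter combination has been hit."""
    rng = random.Random(seed)
    goals = set(itertools.product(*PARAMETERS.values()))
    covered = set()
    tests = []
    while covered != goals and len(tests) < max_tests:
        scenario = tuple(rng.choice(bins) for bins in PARAMETERS.values())
        tests.append(scenario)
        covered.add(scenario)
    return tests, len(covered) / len(goals)

tests, coverage = generate_until_covered()
print(f"{len(tests)} tests generated, coverage {coverage:.0%}")
```

Random generation typically needs more tests than there are goals, which is why coverage is measured rather than assumed.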

Adding genAI

AI-based Simulation Predictor and Reduced Order Modeling software boost the development of digital twins.

The AI Simulation Predictor uses ML with built-in accuracy awareness to help finetune and optimise digital twins by tapping into historical simulation studies and accumulated knowledge.

This comes as Siemens teams up with Amazon to provide the PAVE360 tool in the latter’s cloud for building digital twins of cars using ARM CPU models.

One of the most significant challenges in AI-powered simulation is AI drift, where models extrapolate inaccurately when faced with uncharted design spaces. To address this, the Simulation Predictor introduces accuracy-aware AI that self-verifies predictions, helping engineers to conduct simulations that are not only accurate but also reliable in the context of real-world industrial engineering.
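How the Simulation Predictor verifies its own predictions is not described here. One simple way to make a surrogate model aware of extrapolation, shown purely as an illustration, is to flag any query that lies too far from the designs it was trained on. The data points, threshold and function names are assumptions.

```python
def nearest(training_points, query):
    """Return (distance, value) for the training sample closest to the query."""
    return min((abs(x - query), y) for x, y in training_points)

def predict_with_check(training_points, query, max_distance=0.5):
    """Predict from the nearest sample and flag queries far from any data.

    A query further than max_distance from every training input is treated
    as extrapolation, so the caller can fall back to a full simulation.
    """
    distance, value = nearest(training_points, query)
    return value, distance <= max_distance

# Hypothetical past simulation results: (design parameter, simulated output).
history = [(1.0, 10.2), (1.5, 11.9), (2.0, 14.1), (2.5, 17.0)]

print(predict_with_check(history, 1.6))  # close to known designs
print(predict_with_check(history, 4.0))  # outside the explored design space
```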

For example, thermomechanical fatigue predictions have been upgraded to process approximately 20,000 design elements in only 24 hours, yielding a 20% improvement in the life of components and saving over 15,000 hours of computational time.

Reduced Order Modeling software uses high-fidelity simulation and test data to train and validate AI/ML models. By training AI/ML models on comprehensive datasets, this technology enables engineers to gain robust, reliable and trustworthy insights, helping to eliminate the common issue of AI drift.

This can accelerate simulation models to the point where a detailed fuel-cell plant model runs faster than real time with the same accuracy as a full system model. This enables activities such as model-in-the-loop controller development and testing to be done faster, shortening the overall development cycle by around 25%.

A highly accurate digital model of an expansive network of roads in Los Angeles, California is supporting the development of automated driving technologies. The virtual environment enables engineers to conduct extensive simulation testing of AVs before progressing to the public road.

The Los Angeles model features a 36 km loop, making it one of the largest public road models developed to date. It is navigable in both directions and offers engineers an extensive real world-based environment for conducting comprehensive testing. Capturing the intricate layout of the city’s roads, the model includes diverse configurations such as highways, split dual-carriageways and single-carriageway sections.

The digital model has been created using survey-grade Lidar scan data to create a vehicle dynamics-grade road surface, which is accurate to within 1 mm in height across the entire 36 km route.

The digital twin features 12,400 buildings, 40,000 pieces of vegetation and more than 13,600 other pieces of street furniture, including street lights, traffic lights, road signs, road markings, walls and fences. All of these objects have been physically modelled with appropriate material characteristics, which is critical for testing sensor systems.

The model provides OEMs with the ability to thoroughly train and test perception systems in a safe and repeatable environment before correlating these simulated results on the public road for use by vehicles in real-world scenarios.

This provides a better understanding of what happened in that particular scenario, and allows new perception systems and control algorithms to be tested exhaustively in the same situation before deploying them on a vehicle.

It also incorporates aspects that challenge automated driving technologies, such as roadside parking, islands separating carriageways, drop kerbs in residential areas, rail crossings, bridges, tunnels and a large number of junctions with varying complexity.

GenAI in the cloud is also being used for scenario generation alongside HiL systems. A large language model (LLM) and retrieval-augmented generation (RAG) architecture allows developers to create a vast number of complex and realistic scenarios easily, including edge cases, simply by entering a textual scenario description.

This follows the ASAM OpenSCENARIO standard, so automotive manufacturers and suppliers can simulate and validate autonomous driving systems.

Test system

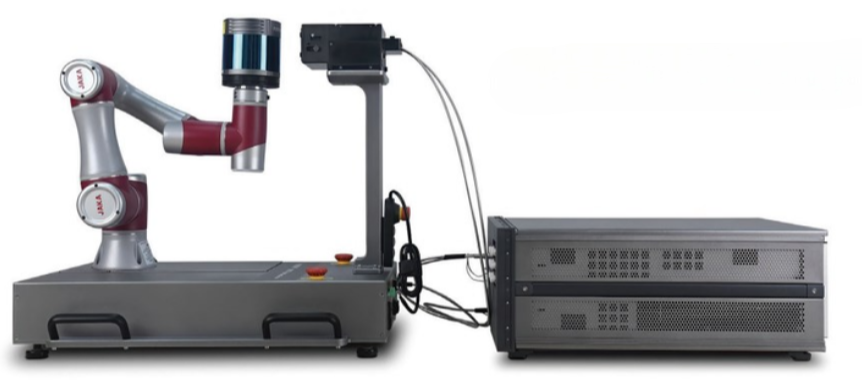

The Lidar Target Simulator (LTS) enables manufacturers (of vehicles and sensors) to test and validate Lidar sensors for AVs. It simulates test targets at a defined distance and reflectivity with a compact and standardised bench setup. This is designed to simplify and accelerate the testing, validation and production of automotive Lidar sensors through standardisation and automation.

The compact bench setup saves test floor space by simulating target distances from 3 to 300 m and surface reflectivity from 10 to 94%. The test software generates analytics by sweeping target distance and reflectivity, enabling design and performance improvements.
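Assuming the sensor under test is a time-of-flight Lidar, a simulated target distance corresponds to a round-trip delay of twice the distance divided by the speed of light. The sketch below works out that delay across a small sweep; how the LTS itself emulates distance is not described here, and the function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def round_trip_delay_ns(distance_m):
    """Time for a Lidar pulse to reach a target and return, in nanoseconds."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S * 1e9

def sweep(distances_m, reflectivities):
    """Enumerate every distance/reflectivity combination in a test sweep."""
    return [(d, r, round_trip_delay_ns(d))
            for d in distances_m for r in reflectivities]

# End points taken from the ranges quoted above; the middle values are arbitrary.
for d, r, delay in sweep([3.0, 150.0, 300.0], [0.10, 0.50, 0.94]):
    print(f"{d:6.1f} m  reflectivity {r:.0%}  delay {delay:8.1f} ns")
```

The delays involved run from roughly 20 ns at 3 m to about 2 µs at 300 m, which gives a sense of the timing precision a bench setup has to reproduce.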

The LTS test setup is fully automated using a collaborative robot, or cobot, which provides precise device movement for field-of-view testing, while the automation software accelerates testing and throughput to support volume production.

Validation

Wind tunnels are used to validate computational fluid dynamics (CFD) simulations with real-world data, a key step in the design and testing of uncrewed systems. By comparing simulation results with actual flight data, developers can refine their designs with greater confidence.

In-house simulations evaluate loading conditions to ensure probes can withstand the environments that UAV developers meet during testing. This is integral to the development cycle, where testing and simulation work hand-in-hand to validate and finetune designs.

This ensures platforms are supported from the earliest stages of development through to production, from wind-tunnel testing and test-flight booms to engine inlet testing, enabling companies to streamline the testing and validation process. Incorporating synthetic data into simulations, and combining it with real-world testing, helps to optimise performance while reducing development time and costs.

Thermal, structural and vibration analyses of the probes show how the components meet the system requirements, including the thermal and aerodynamic loading conditions, before the probe is built.

Quantum

The next generation of quantum computers is set to provide better performance, opening up more complete system simulation.

For example, researchers now estimate that a large-scale computational fluid dynamics simulation of a jet engine, which took 19.2 million high-performance computing cores using classical algorithms, can be conducted using a quantum computer with 30 logical quantum bits (qubits). These 30-qubit machines are currently in development.
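The article does not explain how the solver encodes a flow field, but one general property of quantum registers helps to put the qubit count in context: the state of n qubits is described by 2^n complex amplitudes. The short calculation below evaluates that for the qubit counts mentioned in this section; treating those amplitudes as a stand-in for problem size is an assumption.

```python
def amplitudes(n_qubits):
    """Number of complex amplitudes in the state of n qubits."""
    return 2 ** n_qubits

for n in (4, 11, 30):
    print(n, amplitudes(n))
```

For 30 qubits this comes to just over a billion amplitudes, which suggests how a small logical register could in principle index a very large simulation.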

After conducting 100,000 experiments, the researchers showed the large-scale CFD simulation of a jet engine can be achieved with only 30 logical qubits on a quantum computer, leading to better accuracy and efficiency, and lower costs than current methods.

This would provide large-scale CFD simulation and flow analysis for every engineer once quantum computers become readily available. This is particularly important for UAV designers as it will enable CFD engineers to simulate a full aircraft for the first time, allowing aerospace engineers to greatly improve flight patterns during turbulence.

Even with the current growth in supercomputers, simulating an entire aircraft with classical computing will not be possible until 2080.

For the research, a Hybrid Quantum Classical Finite Method (HQCFM) physics-based solver simulated a non-linear, time-dependent Partial Differential Equation (PDE) from four qubits all the way to 11 qubits. The accuracy and consistency were comparable to classical computers, while the HQCFM did not propagate any error to the next time step. This is a significant breakthrough towards more complex simulations beyond the capacity of classical devices.

The physics-based solver can also be used to solve other PDEs to capture interactions in gas dynamics, traffic flow or flood waves in rivers. Combined with quantum algorithms, the technology can accurately solve complex equations.

Another project involves developing quantum algorithms capable of accelerating the simulation of aerospace equipment, such as radar or telecoms antennas. These algorithms would run on the same Fault Tolerant Quantum Computer (FTQC) systems to accelerate electromagnetic simulations, opening the door to new optimisations of airborne equipment designs.

The results of the electromagnetic simulation will then be tested on airborne equipment, such as radars and antennas, to estimate the exact number of qubits needed to significantly improve performance.

The convergence of digital-twin and high-performance simulation tools is speeding up the development of complex hardware and software for uncrewed systems, whether in the air or on the ground. The next generation of fault-tolerant quantum computer systems promises to take this forward with exponential improvements in simulation performance to find new ways of creating better sensing and communications systems.

Acknowledgements

With thanks to Nathaniel Varano at Aeroprobe, Ray Leto at TotalSim and Daniel Tosoki at aiMotive.

Some examples of simulation tool suppliers

- FRANCE
  - AVSimulation +33 1 4694 9780 www.avsimulation.fr
- GERMANY
  - dSPACE +49 5251 1638 0 www.dspace.com
- HUNGARY
  - aiMotive +36 1 7707 201 www.aimotive.com
- INDIA
  - Wipro +91 80 4682 7999 www.wipro.com
- ISRAEL
  - Cognata +1 855 500 0217 www.cognata.com
  - Foretellix +972 584 347475 www.foretellix.com
- USA
  - Aeroprobe +1 540 443 9215 www.aeroprobe.com
  - Ansys +1 724 746 3304 www.ansys.com
  - BosonQ Psi (BQP) – www.bosonqpsi.com
  - CARLA – www.carla.org
  - Emerson/NI – www.ni.com
  - Nvidia +1 408 486 2500 www.nvidia.com
  - OPAL-RT Technologies +1 514 935 2323 www.opal-rt.com
  - Siemens EDA – www.plm.automation.siemens.com
  - TotalSim +1 614 255 7426 www.totalsim.us
  - Voyage (Cruise) +1 206 623 1986 news.voyage.auto
