Kelvin Hamilton

Twin turbo

Flare Bright’s CEO tells Peter Donaldson how the company solves UAV flight control issues at high speed using a machine learning digital twin

(Images courtesy of Flare Bright)

Artificial intelligence that learns by trial and error, much like a human toddler, and the virtual twin of a real-world UAV combine to develop flight control software automatically at unprecedented speed using tiny data sets. That is the capability that Flare Bright is developing in its Machine Learning Digital Twin (MLDT) system, according to CEO Kelvin Hamilton.

Born in the mid-1970s in a small village in mid-Wales, Hamilton recalls being impressed by Cold War jets roaring overhead in the low-flying training area there, particularly Vulcan bombers, which constituted his introduction to aviation as a boy. An education in electrical and electronic engineering led him initially into underwater robotics, but these days he runs a company dedicated to making UAVs safer and optimising their performance using MLDT technology.

Flare Bright runs sparse flight test data and environmental measurements through its own software. “First, we use the drone’s flight characteristics that we calculate from a few seconds of flight data, which is a big advantage over most AI, which requires huge data sets,” Hamilton says.

“Second, we factor in wind and environmental influences. We don’t need complex fluid dynamic models here, because we throw every possible scenario at the situation and, more or less, cover a year’s worth of flight tests in a simulated environment in a few hours.”

Key to autonomous flight

He emphasises that ML unlocks the potential of modelling and simulation in the development of complex technologies. “ML is fantastic at optimising in a huge solution space, which a human brain simply cannot cope with,” he says. “To understand that space and train drones to fly safely is the key to unmanned and autonomous flight.”

Flare Bright uses many different types of ML, most of them optimisation techniques, selected according to the problem to be solved. “Knowing which types of ML to use, and which data sets to throw at them, is one of our key skills,” Hamilton says.

“What we avoid is the need to use lots of computing power and large training data sets. We optimise using the data we create, and use edge computing [laptops and desktops] to ensure our code is hyper-efficient, meaning that it solves problems in as few steps as possible.”

The use of digital twins is increasingly common for product development, because it can avoid the costs of building physical prototypes. Their application (in conjunction with ML) to creating flight control software is challenging though, because it has to run very fast to achieve worthwhile savings in time and money – ideally orders of magnitude faster than real time, Hamilton says. It is here that the ability to write ‘hyper-efficient’ code becomes essential.

Back to efficient code

He points out that in the early days of personal computers, their relatively meagre processing power and memory made it essential to write efficient code, but the art has faded as computing power and software complexity have grown.

“Because software is so complex now, most people don’t write efficient code,” he says. “What they do is write blocks of code, and then if you have to go through that block 10 times in order to achieve the answer it doesn’t matter, because everything runs so quickly. So efficiency is not something that coders have really been taught properly for 20-odd years.”

Hamilton points out that the company’s CTO, Conrad Rider, is a former champion at solving Rubik’s Cube, with a particular knack for solving it in the fewest moves.

“That same sort of thinking is how he implements the software architecture and code,” Hamilton says. “That is part of the DNA of all our software engineers now, and we assess new recruits on their ability to learn these techniques rapidly. Rider wrote the entire company software ecosystem from scratch to be optimised for ML.”

Solving problems in as few steps as possible is directly relevant to writing efficient code that can characterise a UAV using a minimum of real-world data to create its digital twin, then have the computer run through a very large number of scenarios in which it learns by trial and error to reproduce the behaviour of the real aircraft, checked periodically against flight test results.

Hamilton emphasises that Flare Bright’s software needs only relatively small amounts of real-world data, which he refers to as “ground truth”, on which to build. He says, “Most AIs need a lot of ground truth, a huge data set of, say, 100,000 pictures of a bridge and 100,000 pictures of a pylon, tasking the computer with looking at them all and working out which are which. It will get better and better at that through reinforcement learning.”

Tiny data sets

“Our data sets when we were developing our aircraft were six one-second flights,” he says. “That’s all the data we need because it gives us a good enough approximation for our model to throw every scenario we can imagine at it, and because it works so quickly, we can do that overnight.

“We can run hundreds of thousands of scenarios with different wind vectors, angles of flying through the wind, strengths, gusts and so on. From the sensor feeds on the aircraft we’ll know enough in those six one-second flights to know roughly how it flies. Then the digital twin tunes and optimises the flight control program by getting it closer and closer to the way it thinks the aircraft should fly.

“Normally we get it pretty much right – over 90% – first time. It’s not always perfect, but then we can use the process again. That means we are saving about 90% of costs and time on any standard flight test programme.”

Accelerated development

Hamilton says MLDT can accelerate development times by a factor of 10, and also produces some remarkable capabilities almost as a by-product.

“For example, using our MLDT we can sense the wind in flight without using pitot tubes or any other direct measurement systems,” he says. “In turn, that allows the crucial wind modelling needed for flightpath planning in complex urban areas to be verified by every drone that flies, which in turn allows optimal paths to be flown. The paths themselves can be optimised using MLDT, taking into account not just the wind around buildings but accurate capabilities of each drone.”

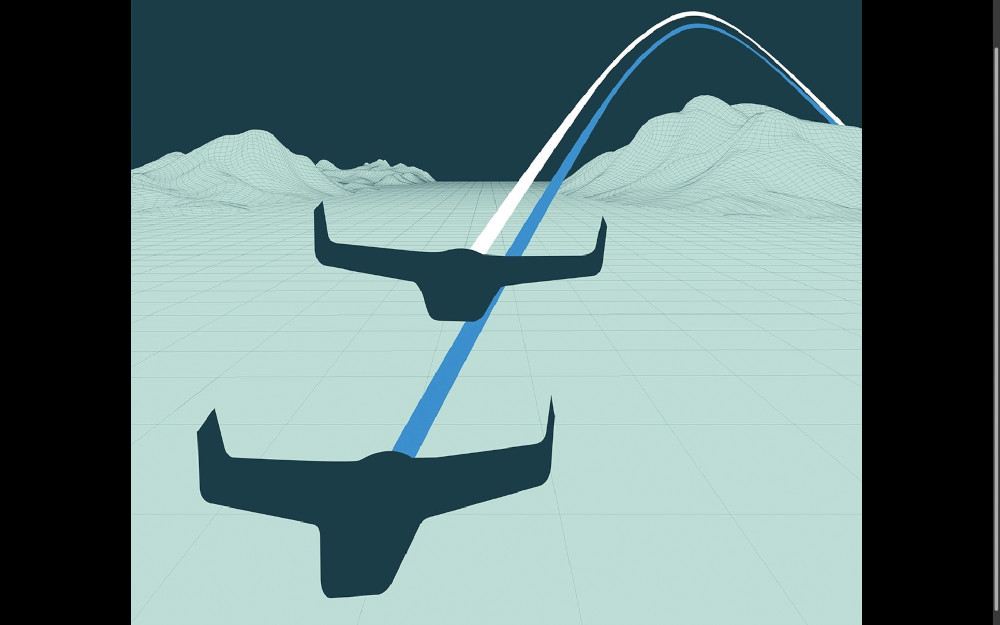

This path optimisation process can yield some counter-intuitive results, he adds, citing a flight profile that the computer was optimising for maximum time spent observing a selected target before returning the UAV to the launch point.

“What the MLDT gave us resembled a handbrake turn at apogee,” he says. “Our initial inclination was to reject it because it looked wrong, but it actually worked brilliantly. We just never thought of sacrificing the effectiveness of the control surfaces for a brief instant, even though the aircraft had enough momentum to keep turning. It achieved the objectives better than anything we would have chosen.”

Learn like a toddler

Hamilton likens the difference in the ways the MLDT and other AIs learn to the difference between the strategies of a toddler and those of a teenager. A teenager will learn by reading books, he says, whereas a toddler learns by experimenting.

“The teenage way of learning is the way most neural networks work at the moment,” he says. “They take in lots of data; it’s like doing endless sine and cosine calculations until you’ve trained your brain how to do them. A toddler will try things out, sitting and standing in different ways, falling over and trying something else. They are open to any idea – much to any parent’s horror!

“We learn like a toddler. We don’t need to know particular physics and aerodynamics rules; what we need to know is whether it works. As our MLDT progresses, it is getting better and better at understanding what works and what doesn’t.”

The system’s judgement about whether a particular combination of control surface movements works or not is based on whatever parameters it has been told to optimise, as measured and fed back by the UAV’s suite of a dozen or so onboard sensors and control surface actuators.

“For each data point the sensors provide, we’ll know what the aircraft is doing, and the digital twin will know the ground truth of what’s going on in each of those scenarios,” Hamilton says.

“We sample at a very high rate, in the hundreds of hertz, so even in those one-second flights we get enough data from the sensors without needing to use computer capacity in the cloud to run it all.

“We’ll test the drone in the real world, and if the AI is giving spurious results, that’s fine, we put in the results from the real world into the machine. It will then run those hundreds of thousands of scenarios again.

“The right answer is what happened in the real world – that’s the ground truth – so the AI accepts the results that come closest to that and discards all those that are farthest away, so the machine learns as it goes.”
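The accept-the-closest, discard-the-farthest loop Hamilton describes can be illustrated with a toy example. The sketch below is not Flare Bright's algorithm: it swaps in a plain random search with elite selection, and the one-parameter "twin" (a speed that decays under a single drag coefficient), the 200 Hz sample rate and every function name are assumptions made purely for illustration.

```python
import math
import random


def simulate(drag, v0=10.0, rate_hz=200, duration_s=1.0):
    """Toy twin (assumed model): speed decaying under one drag coefficient."""
    n = int(rate_hz * duration_s)
    return [v0 * math.exp(-drag * i / rate_hz) for i in range(n)]


def mismatch(candidate, truth):
    """Mean squared difference between a simulated run and the ground truth."""
    return sum((c - t) ** 2 for c, t in zip(candidate, truth)) / len(truth)


def fit_drag(truth, rounds=20, population=200, keep=20, seed=0):
    """Trial and error: guess, keep the guesses closest to truth, guess again."""
    rng = random.Random(seed)
    centre, spread = 1.0, 1.0  # deliberately vague starting belief
    for _ in range(rounds):
        guesses = [max(0.0, rng.gauss(centre, spread)) for _ in range(population)]
        # Rank every guess by how closely its simulated flight matches reality.
        guesses.sort(key=lambda d: mismatch(simulate(d), truth))
        elite = guesses[:keep]  # accept the closest, discard the rest
        centre = sum(elite) / keep
        spread = max(1e-6, (max(elite) - min(elite)) / 2)
    return centre


# Stand-in for one second of real flight data sampled at 200 Hz.
ground_truth = simulate(0.8)
estimate = fit_drag(ground_truth)
print(f"estimated drag coefficient: {estimate:.3f}")
```

Each round narrows the search around whatever matched the recorded flight best, so the estimate settles near the value used to generate the stand-in data without the code ever being told the underlying physics.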

GNSS independence

A high-fidelity digital twin of an aircraft, coupled with ML, inherently has some predictive power over its behaviour. Flare Bright has exploited that to constrain the errors in low-cost INSs to provide small UAVs with a useful degree of independence from GNSS. That enables them to tolerate loss of a signal for longer and compensate for spoofing, for example.

As Hamilton explains, “Large INSs are established, but are heavy, expensive, consume lots of power and, owing to the laser gyros, cannot easily be miniaturised. Lots of academics and start-ups have tried to perfect a chip-based INS, but the navigation error typically runs away exponentially after a few seconds.

“We have constrained the error to a linear function using our MLDT techniques, and are achieving a few minutes of accurate inertial navigation in a low-power credit card-sized chip based on a Raspberry Pi CPU.”

Constraining the growth in a navigational error from the exponential to the linear is a big deal, so it is worth digging into the idea a little further.

“Typically, for every second of flight the error is going to grow a bit more until it runs away exponentially,” Hamilton notes. “In most academic flight control departments, they will manage 10, maybe 15 seconds before the thing is just too inaccurate to be useful. But because we are putting a layer of ML in the digital twin, every time there’s an error we can calculate what the maximum error should be, and then we have it constrained.

“So we know in any millisecond how big the error can be and what the compounding effect will be, and therefore we can strip out all the nonsensical results that show paths that the aircraft will just never fly. The navigation error grows all the time – you can’t do anything about that – but we manage to keep the growth linear, so it gives us a lot longer before we lose too much accuracy to the drift.”
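To see why the shape of the error curve matters so much, it helps to compare how long each growth law takes to exceed a given tolerance. The sketch below is a back-of-envelope illustration only: the two error models, the 0.1 m starting error, the 3 s time constant, the 0.033 m/s drift rate and the 10 m tolerance are all assumed numbers, not figures from Flare Bright.

```python
import math


def time_to_exceed_exponential(tolerance_m, e0_m, tau_s):
    """Assumed model e(t) = e0 * exp(t / tau); solve for e(t) = tolerance."""
    return tau_s * math.log(tolerance_m / e0_m)


def time_to_exceed_linear(tolerance_m, e0_m, rate_m_per_s):
    """Assumed model e(t) = e0 + rate * t; solve for e(t) = tolerance."""
    return (tolerance_m - e0_m) / rate_m_per_s


# Illustrative values only (assumptions, not measured data).
TOLERANCE_M = 10.0
E0_M = 0.1

t_exp = time_to_exceed_exponential(TOLERANCE_M, E0_M, tau_s=3.0)
t_lin = time_to_exceed_linear(TOLERANCE_M, E0_M, rate_m_per_s=0.033)
print(f"exponential drift usable for {t_exp:.1f} s")
print(f"linear drift usable for {t_lin:.1f} s")
```

With these assumed numbers the exponential case crosses the tolerance after roughly 14 seconds, while the linear case lasts about 300 seconds, which is the same order of difference Hamilton describes between seconds and minutes of useful inertial navigation.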

He adds that knowing the maximum distance the aircraft could be from the position the navigation system calculates is very useful for anti-spoofing, for example.

“If you say, ‘OK, we’ve flown for 5 minutes, the maximum we can be from our point is 10 m, let’s say, but our GPS is showing that we are 50 m away from where it thinks we should be’, there has either to be an error in a sensor or you are being spoofed,” he says. “In defence scenarios, and increasingly in civil ones, that is going to become a big issue.”
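The consistency check in that example reduces to a single comparison, sketched below. This is a minimal illustration under stated assumptions rather than Flare Bright's implementation: the linear drift bound, the flat two-dimensional coordinates in metres and the names `max_drift_m` and `gnss_is_suspect` are all hypothetical.

```python
import math


def max_drift_m(elapsed_s, rate_m_per_s, initial_m=0.0):
    """Largest inertial position error possible after elapsed_s (assumed linear)."""
    return initial_m + rate_m_per_s * elapsed_s


def gnss_is_suspect(ins_xy, gnss_xy, elapsed_s, rate_m_per_s, gnss_accuracy_m=0.0):
    """Flag the GNSS fix if it lies further from the inertial estimate than
    the drift bound (plus any allowance for normal GNSS error) permits."""
    gap_m = math.dist(ins_xy, gnss_xy)
    return gap_m > max_drift_m(elapsed_s, rate_m_per_s) + gnss_accuracy_m


# Hamilton's example: 5 minutes flown, at most 10 m of drift allowed.
ELAPSED_S = 300.0
RATE_M_PER_S = 10.0 / 300.0  # assumed rate that yields a 10 m bound at 5 minutes

spoofed = gnss_is_suspect((0.0, 0.0), (50.0, 0.0), ELAPSED_S, RATE_M_PER_S)
plausible = gnss_is_suspect((0.0, 0.0), (6.0, 0.0), ELAPSED_S, RATE_M_PER_S)
print(spoofed, plausible)
```

A 50 m disagreement exceeds the 10 m bound and is flagged, whereas a 6 m disagreement is within what inertial drift alone could explain. A flag does not distinguish spoofing from a faulty sensor; it only says the two sources cannot both be right.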

Flare Bright’s work in this area has attracted funding from the US DoD and the UK MoD.

Complementing VSLAM

This ability to constrain navigation errors also makes MLDT a useful complement to other GNSS-independent techniques, such as Visual Simultaneous Localisation and Mapping (VSLAM).

“I have spent many years working with VSLAM, and the main problem is making them robust with the processor capability available,” Hamilton says. “They can also be quite flaky in challenging situations. Our system could kick in when VSLAM is struggling to find ground truth.

“We look at it as a full stack of navigation sources. If you have an average drone, you start off flying on GNSS, and that gives you a reasonable degree of accuracy. Then, typically, if you need more accuracy or are worried about GNSS interference, outages, jamming and so on, you need an extra system, and the first one you add is likely to be some sort of VSLAM.

“Generally, they are tried and tested, and work well in certain scenarios and conditions, but they become flaky and fail in others. If you’re in fog or a snowstorm or flying over a lake or a desert, you’ve got no reference points so you can’t rely on VSLAM.

“As the next layer in the stack, we will kick in and give you that extra 1 to 5 minutes of accuracy you need while your VSLAM system is trying to pick up reference points and understand where it is again.

“We’re working on a product now that combines all those systems into one, with ML being used to boost overall performance.”
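The layered fallback Hamilton outlines can be pictured as a priority list that is walked from top to bottom. The sketch below is a simplified illustration, not the product he mentions: the `NavSource` class, the health flags and the 300 s holdover limit (taken from the upper end of his "1 to 5 minutes") are assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class NavSource:
    name: str
    available: bool
    max_holdover_s: Optional[float] = None  # None means no time limit


def pick_source(stack: Sequence[NavSource], seconds_since_last_fix: float) -> Optional[str]:
    """Return the first usable source in priority order, or None if all fail."""
    for source in stack:
        within_limit = (source.max_holdover_s is None
                        or seconds_since_last_fix <= source.max_holdover_s)
        if source.available and within_limit:
            return source.name
    return None


def build_stack(gnss_ok: bool, vslam_ok: bool) -> list:
    """Priority order as described in the text: GNSS, then VSLAM, then inertial."""
    return [
        NavSource("GNSS", gnss_ok),
        NavSource("VSLAM", vslam_ok),
        # Inertial holdover is only trusted for a limited time (assumed 300 s).
        NavSource("MLDT-aided INS", True, max_holdover_s=300.0),
    ]


# GNSS jammed and VSLAM lost in fog, two minutes after the last good fix.
print(pick_source(build_stack(False, False), seconds_since_last_fix=120.0))
```

Treating the inertial layer as time-limited reflects the point that it bridges the gap while VSLAM recovers, rather than replacing the other sources indefinitely.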

Hamilton argues that MLDT could make an important contribution to the certification of UAVs for operation in national airspace. “We are about to begin a UK government future flight programme where we are focusing on demonstrating how our models can assure the safety of other drones by accurately predicting what they are going to do in every scenario,” he says. “That could be part of the safety case a regulator would use.”

Kelvin Hamilton

Now in his mid-40s, Kelvin Hamilton enjoyed computer science and physics at school, but left at 15 – “It just wasn’t for me,” he says – to start work in an electronics factory, from which he did day-release courses to earn ONC and HNC qualifications in electrical and electronic engineering, working his way up to becoming an r&d technician.

A degree in those subjects from Cardiff University followed, leading to a PhD in autonomous systems pursued in the Ocean Systems Lab at Heriot-Watt University, in Edinburgh. Here, lab leader Professor David Lane supervised his PhD and became a mentor. “He gave me the chance to get into autonomous systems and provided lots of good advice, as well as a great team and facilities,” he says.

In parallel with that, he developed his own simulated underwater exploration robotics project, teaching himself to program in C++ along the way and winning a small contract to build a subsea robot.

He was attracted to autonomous systems by the idea of robots’ ability to explore where humans can’t go. “My initial love was space exploration, but I realised it’s very hard to develop something and get it fielded, which is why I went into subsea,” he says.

A 20-year career followed, in which he worked for variable-speed drive developer Control Techniques and co-founded subsea autonomy specialist SeeByte.

He started Flare Bright in November 2015 as a means to explore ideas and their feasibility, preparing to grow as soon as a clear business case became apparent. “That happened in late 2017, when our CTO Conrad Rider joined, followed by our CCO Chris Daniels in April 2019,” he says.

These days, 14 people report to Hamilton, albeit indirectly, and he reports that the company is growing steadily. “Most of the company’s staff are engineers, and mostly ML software engineers trained in-house, who originally started as mathematicians and physicists,” he says. “It’s difficult to find the exact skill set so we take on people with ‘the spark’ and let them loose on what interests them while training them up.”
