Event-based AI for driverless cars

(Image: University of Munich)

Researchers in Germany have developed a system that uses event-driven AI to estimate scene depth more effectively for driverless cars.

Traditional cameras used by driverless cars capture images frame by frame, which can cause motion blur or the loss of important details when the vehicle moves quickly or lighting is poor. Event-driven cameras instead use neuromorphic sensors that record only the changes in brightness at each pixel. This allows them to capture motion hundreds of times faster than ordinary cameras and to keep working in low light, while consuming less power.
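
To make the brightness-change idea concrete, here is a minimal sketch of the idealized model often used to describe event cameras: each pixel emits an event whenever its log-intensity has changed by a fixed contrast threshold since its last event. The function name `frames_to_events`, the threshold value and the frame-based input are illustrative assumptions; a real sensor does this asynchronously in hardware and never produces frames.

```python
import math
from typing import List, Tuple

# One event: (x, y, timestamp, polarity); polarity +1 = brighter, -1 = darker.
Event = Tuple[int, int, float, int]


def frames_to_events(frames: List[List[List[float]]], timestamps: List[float],
                     threshold: float = 0.2, eps: float = 1e-3) -> List[Event]:
    """Idealized event-camera model driven by a sequence of grayscale frames.

    Each pixel keeps a reference log-intensity and emits an event every time
    its current log-intensity moves at least `threshold` away from it.
    Pixels whose brightness does not change emit nothing, which is why event
    streams are sparse. `eps` avoids taking log(0) for black pixels.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    height, width = len(frames[0]), len(frames[0][0])
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                current = math.log(frame[y][x] + eps)
                delta = current - ref[y][x]
                # A large change may cross the threshold several times.
                while abs(delta) >= threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append((x, y, t, polarity))
                    ref[y][x] += polarity * threshold
                    delta = current - ref[y][x]
    return events
```

Doubling the brightness of one pixel in a 2×2 scene yields a few positive events at that pixel only; the three unchanged pixels stay silent.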

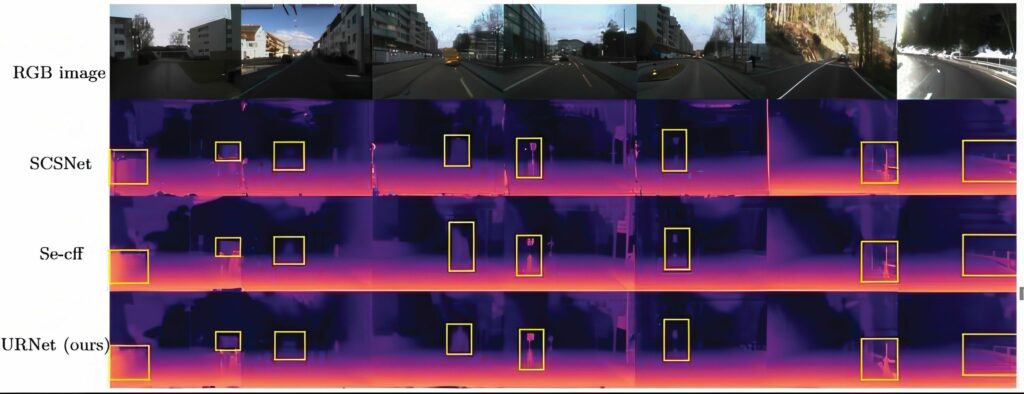

However, the sparsity and noise of event streams pose significant challenges for accurate depth prediction. The Uncertainty-aware Refinement Network (URNet), an algorithm developed by researchers at the Technical University of Munich, converts event-camera output into 3D depth maps that autonomous vehicles can use.
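
Because a network cannot easily consume a raw, irregular list of events, event streams are commonly packed into a tensor first. The sketch below shows one widely used representation, a spatio-temporal voxel grid, purely as an assumption: the article does not say which input representation URNet uses, and the function name and bin count are illustrative.

```python
from typing import List, Tuple

Event = Tuple[int, int, float, int]  # (x, y, timestamp, polarity)


def events_to_voxel_grid(events: List[Event], width: int, height: int,
                         num_bins: int) -> List[List[List[float]]]:
    """Accumulate events into a (num_bins, height, width) grid.

    Each event's polarity is split between the two nearest time bins by
    linear interpolation, so timing information survives the binning.
    Pixels that never fire stay at zero, reflecting the sparsity of events.
    """
    if num_bins < 1:
        raise ValueError("num_bins must be at least 1")
    grid = [[[0.0] * width for _ in range(height)] for _ in range(num_bins)]
    if not events:
        return grid
    t0 = min(e[2] for e in events)
    t1 = max(e[2] for e in events)
    span = (t1 - t0) or 1.0  # avoid division by zero if all timestamps match
    for x, y, t, p in events:
        # Continuous bin coordinate in [0, num_bins - 1].
        tb = (t - t0) / span * (num_bins - 1)
        left = int(tb)
        frac = tb - left
        grid[left][y][x] += p * (1.0 - frac)
        if left + 1 < num_bins:
            grid[left + 1][y][x] += p * frac
    return grid
```

An event a quarter of the way through a window with three bins lands halfway between bins 0 and 1, contributing half its polarity to each.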

URNet’s core innovation is how it processes information through local–global refinement and uncertainty-aware learning. First, URNet focuses on local refinement, using convolutional layers to recover fine-grained details such as the edges of cars, road markings or pedestrians from sparse event signals. Then, in the global refinement stage, the model applies a lightweight attention mechanism to capture the broader structure of the scene, ensuring that local predictions are consistent with the overall environment.
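
The two-stage idea can be illustrated with a toy, framework-free sketch: a 3×3 convolution stands in for the learned local-refinement layers, and single-head self-attention over image patches stands in for the lightweight global attention. The fixed kernel, the patch size, the blending factor `gamma` and the absence of learned query/key/value projections are all simplifying assumptions; the article does not describe URNet's layers at this level of detail.

```python
import math
from typing import List

Grid = List[List[float]]


def conv3x3(image: Grid, kernel: Grid) -> Grid:
    """Local refinement stand-in: a 3x3 convolution with zero padding.

    Like the 'convolution' layers in deep-learning frameworks, this computes
    a cross-correlation. In a trained network the kernel weights are learned.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:  # zero padding at borders
                        acc += kernel[dy + 1][dx + 1] * image[yy][xx]
            out[y][x] = acc
    return out


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def global_attention(image: Grid, patch: int, gamma: float = 0.5) -> Grid:
    """Global refinement stand-in: single-head self-attention across patches.

    Every patch attends to every other patch, so distant parts of the scene
    can influence each other. Queries, keys and values are the raw patch
    values (no learned projections). Assumes height and width are multiples
    of `patch`.
    """
    h, w = len(image), len(image[0])
    coords = [(py, px) for py in range(0, h, patch) for px in range(0, w, patch)]
    tokens = [[image[py + i][px + j] for i in range(patch) for j in range(patch)]
              for py, px in coords]
    d = len(tokens[0])
    out = [row[:] for row in image]
    for (py, px), q in zip(coords, tokens):
        # Scaled dot-product scores of this patch against all patches.
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        context = [sum(wt * v[n] for wt, v in zip(weights, tokens)) for n in range(d)]
        for n in range(d):
            i, j = divmod(n, patch)
            # Blend each local value with the attention-weighted scene context.
            out[py + i][px + j] = (1 - gamma) * q[n] + gamma * context[n]
    return out
```

Chaining the two, as in `global_attention(conv3x3(depth, kernel), patch=2)`, mirrors the local-then-global order described above. Cost is the design trade-off: the convolution only looks at immediate neighbours, while attention compares every patch with every other patch, which is why practical models keep this stage lightweight.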

This strategy allows the network to capture both fine textures and the overall structure of the driving scene. At the same time, URNet incorporates uncertainty-aware learning: it predicts not only depth but also how reliable each prediction is, producing a confidence score for every pixel. When confidence is low, for instance in glare, rain or strong shadows, the vehicle can adjust its response accordingly, for example by slowing down, relying more on other sensors or prioritising safer decisions. This built-in self-assessment makes the model more robust and trustworthy in unpredictable real-world conditions.
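
The article does not give URNet's training objective. A common way to make a depth network uncertainty-aware, shown here only as an assumed stand-in, is to have it predict a per-pixel log-scale `s` alongside depth and train it with a Laplacian negative log-likelihood. The scale-to-confidence mapping and the `choose_action` policy below are hypothetical illustrations of how a downstream system might use such scores.

```python
import math
from typing import List


def laplace_nll(pred_depth: List[float], log_scale: List[float],
                target: List[float]) -> float:
    """Mean per-pixel Laplacian negative log-likelihood (constant dropped).

    The network predicts depth d_hat and a log-scale s per pixel. The error
    term |d - d_hat| * exp(-s) is down-weighted where s is large (low
    confidence), but the + s term penalises claiming uncertainty everywhere.
    """
    total = 0.0
    for d_hat, s, d in zip(pred_depth, log_scale, target):
        total += abs(d - d_hat) * math.exp(-s) + s
    return total / len(target)


def confidence(log_scale: List[float]) -> List[float]:
    """Illustrative mapping from predicted scale to a score in (0, 1):
    sigmoid(-s), so a small predicted scale means high confidence."""
    return [1.0 / (1.0 + math.exp(s)) for s in log_scale]


def choose_action(conf: List[float], threshold: float = 0.5,
                  max_uncertain_fraction: float = 0.2) -> str:
    """Hypothetical downstream policy, not part of URNet: switch to a
    cautious mode when too many pixels fall below the confidence threshold."""
    uncertain = sum(1 for c in conf if c < threshold) / len(conf)
    if uncertain > max_uncertain_fraction:
        return "cautious"  # e.g. slow down and lean on other sensors
    return "normal"
```

For a fixed absolute error r at a pixel, the term r·exp(−s) + s is smallest when exp(s) = r, so the predicted scale learns to track the typical error there. That is what makes the derived confidence score meaningful rather than arbitrary.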

“Event cameras provide unprecedented temporal resolution, but harnessing the data for reliable depth estimation has been a major challenge,” said Dr Hu Cao, one of the lead researchers. “With URNet, we introduce uncertainty-aware refinement, giving depth prediction both precision and reliability.”

By combining high-speed event-based sensing with a confidence-aware learning mechanism, URNet represents a step forward in intelligent perception for autonomous vehicles, enabling them to understand, evaluate and react to the world around them with greater safety and reliability. The technology could significantly improve autonomous-driving safety and enhance advanced driver-assistance systems and future vehicle perception platforms designed for challenging lighting and motion conditions.
