Davide Scaramuzza

Peter Donaldson talks to this robotics and perception professor about his work on developing vision-based navigation for UAVs

A native of Terni in the region of Umbria, central Italy, Dr Davide Scaramuzza leads a team of researchers in the Robotics and Perception Group at the University of Zurich, which is working to develop technology to enable autonomous UAVs to fly themselves as well as or even better than the best human pilots.

He and his team took a big step in that direction in June 2022 when their tiny Swift quadcopter, equipped with a camera, inertial sensors and a neural network controller, beat a group of world champion UAV racing pilots over a demanding course by half a second – a wide margin at this level of the sport and an important milestone on a development path stretching back almost 15 years.

Other milestones include enabling UAVs to perform aerobatic manoeuvres autonomously and to navigate in environments of which they have no prior knowledge. Together, these are steps towards a broader capability he calls agile navigation.

Proxies for usefulness

Dr Scaramuzza is the first to admit that performing stunts and winning races do not solve any of the weighty problems facing humanity that he really wants to tackle with the help of UAVs, but a lap time is a hard measure of technological progress, and the best human pilots are relatable comparators.

“These tasks are not very useful in practice, but they serve as proxies in developing algorithms that can one day be used for things that matter to society, such as cargo delivery, forest monitoring, infrastructure inspection, search & rescue and so on,” he says.

With small multi-copters in particular, speed is important if the UAVs are to maximise their productivity in the 30 minutes or so of flight time that their limited battery capacities allow. Also, flying a UAV as close to its best range speed as possible maximises its productivity, as Dr Scaramuzza’s team has shown.

Setting his work in context, he places it where robotics, machine vision and neuroscience meet. The spark for his interest here was struck when, in his last year at university, he attended a match in the RoboCup soccer tournament for robots. He recalls being surprised at the use of a camera on the ceiling of the ‘stadium’ to localise the robot footballers, which he regarded as cheating.

There was also a small number of robots in their own league, each of which had an onboard camera and a mirror that gave it a panoramic view, an arrangement that he looked on with similar disapproval.

“Humans don’t play like that,” he says. “What would happen if you had a camera that could only look in the direction the robot was moving? That got me interested in trying to mimic human or animal behaviour, which in turn interested me in working on vision-based navigation.”

He regards his PhD adviser, Prof Roland Siegwart, as a mentor, along with Prof Kostas Daniilidis and Prof Vijay Kumar, who were his advisers during his postdoctoral research work. One piece of advice from Prof Siegwart that has shaped his approach to his work was to start with a problem to solve, a problem that is important to industry and society.

“The research questions will come from trying to solve a difficult problem,” he says. “This is different from researchers who start instead with a theoretical problem. They are both valid, but I like the pragmatic approach more.”

V-SLAM pioneer

One such problem was enabling UAVs to fly autonomously without GPS, using sensors that are small, light and frugal enough with energy for vehicles with small battery capacities. In 2009, after completing his PhD the previous year, Dr Scaramuzza worked with Prof Siegwart and a team of PhD students at ETH Zurich on the first demonstration of a small UAV able to fly by itself using an onboard camera and an inertial measurement unit, and running an early visual simultaneous localisation and mapping (V-SLAM) algorithm.

The UAV flew for just 10 m in a straight line. It was tethered to a fishing rod to comply with safety rules and was linked by a 20 m USB cable to a laptop running the V-SLAM, but it impressed the EU enough for it to fund what became the sFLY project, with Dr Scaramuzza as scientific coordinator. Running from 2009 to 2011, sFLY developed the algorithm further to enable multi-UAV operations.

“The goal was to have a swarm of mini-UAVs that would fly themselves to explore an unknown environment, for example in mapping a building after an earthquake for search & rescue.”

V-SLAM is now an established technique for UAV navigation in GPS-denied areas, with NASA’s Ingenuity helicopter using it to explore Mars, for example, and the sFLY project was its first practical demonstration, he says.

The challenges centred on the fact that V-SLAM requires the algorithm to build a 3D map using images from the camera while working out the UAV’s position on it.

“These images are very rich in information, with a lot of pixels, and you cannot process all the pixels on board,” Dr Scaramuzza says. “So we worked on a means of extracting only salient features from the images – specific points of interest. We would then triangulate those points to build a 3D map of the environment as the UAV moved through it.”
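As a rough illustration of the triangulation step described above, the Python sketch below recovers one 3D point from two sightings of the same feature, using the midpoint method. The function name, camera positions and landmark are illustrative assumptions; the article does not say which triangulation method the team used.

```python
from typing import Tuple

Vec = Tuple[float, float, float]


def _sub(u: Vec, v: Vec) -> Vec:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def _dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def _along(origin: Vec, direction: Vec, scale: float) -> Vec:
    return tuple(o + scale * d for o, d in zip(origin, direction))


def triangulate_midpoint(c1: Vec, d1: Vec, c2: Vec, d2: Vec) -> Vec:
    """Estimate a 3D point from two viewing rays.

    c1, c2 are camera centres; d1, d2 are ray directions towards the
    same feature. Returns the midpoint of the shortest segment joining
    the two rays (hypothetical helper, not taken from the article).
    """
    w = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the camera must move first")
    s = (b * e - c * d) / denom   # distance along ray 1
    t = (a * e - b * d) / denom   # distance along ray 2
    p1 = _along(c1, d1, s)
    p2 = _along(c2, d2, t)
    return tuple((x + y) / 2.0 for x, y in zip(p1, p2))


# Assumed example: one landmark seen from two positions 0.5 m apart.
landmark = (1.0, 2.0, 5.0)
cam_a = (0.0, 0.0, 0.0)
cam_b = (0.5, 0.0, 0.0)
point = triangulate_midpoint(cam_a, _sub(landmark, cam_a),
                             cam_b, _sub(landmark, cam_b))
print(point)
```

With noise-free rays the estimate coincides with the landmark. The parallel-ray check reflects why the UAV has to move before a feature can be placed on the map: two views from the same spot carry no depth information.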

Meeting the two high-level requirements of a SLAM task – building the map and working out the UAV’s position within it – is what he calls a chicken-and-egg problem. “You cannot localise if you haven’t built up a map in time, so you have to do both at the same time.”

In V-SLAM, the overall task is divided into four component tasks – feature extraction and tracking, triangulation (mapping), motion estimation and map optimisation. The purpose of map optimisation is to correct the errors that build up while the UAV is navigating, he says.

“We are using information from the cameras and the inertial sensors, a gyroscope and an accelerometer, which provide angular velocities and accelerations in radians per second and metres per second squared,” he explains. “This is very useful information, because a map built by cameras alone can drift over time, especially when the UAV has moved a long way from its starting point, but the accelerometer will always sense gravity when the UAV is stationary, and you can use that information to correct the drift.”
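The idea of using gravity to rein in drift can be sketched with a complementary filter, a simple stand-in chosen here for illustration; the article does not say which estimator the team uses. The sketch assumes a sensor that reads (0, 0, +9.81) m/s² when level, a 100 Hz IMU and a small constant gyroscope bias, all of which are hypothetical values.

```python
import math


def tilt_from_accel(ax: float, ay: float, az: float):
    """Roll and pitch (radians) from a stationary accelerometer reading.

    Assumed convention: the sensor reads (0, 0, +9.81) m/s^2 when level.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_step(angle: float, gyro_rate: float,
                       accel_angle: float, dt: float,
                       alpha: float = 0.98) -> float:
    """Blend the integrated gyro angle with the gravity-derived angle."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


# Hypothetical scenario: the UAV sits level and still, but its gyroscope
# reports a small constant bias, so pure integration drifts.
GYRO_BIAS = 0.01   # rad/s, assumed
DT = 0.01          # s, assumed 100 Hz IMU
_, accel_pitch = tilt_from_accel(0.0, 0.0, 9.81)

gyro_only = 0.0
fused = 0.0
for _ in range(1000):  # 10 seconds of readings
    gyro_only += GYRO_BIAS * DT
    fused = complementary_step(fused, GYRO_BIAS, accel_pitch, DT)

print(f"gyro only: {gyro_only:.4f} rad, fused: {fused:.4f} rad")
```

In this toy case the gyro-only estimate drifts to about 0.1 rad after 10 seconds, while the fused estimate settles near 0.005 rad because the gravity reading keeps pulling it back.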

Event camera potential

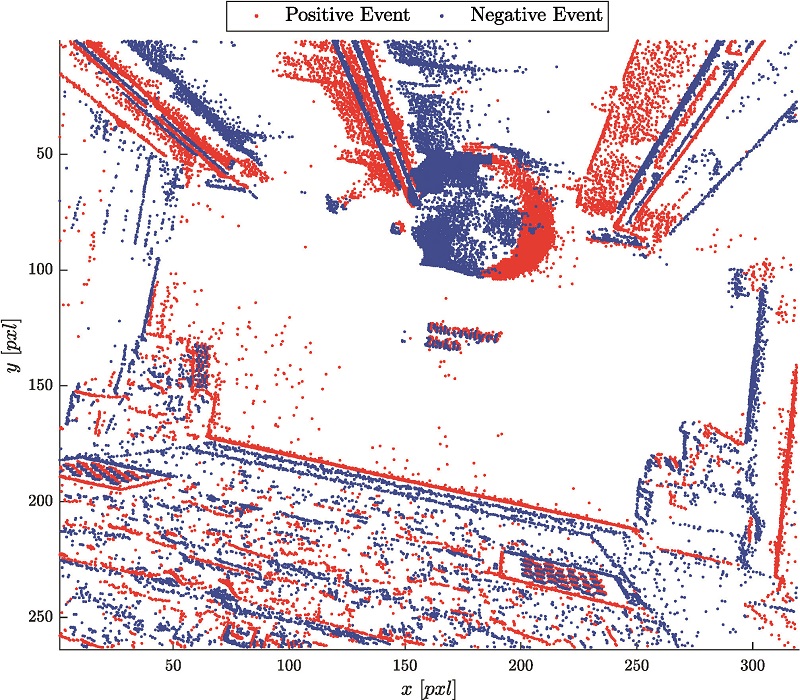

Dr Scaramuzza and his team continue to develop and refine autonomous visual navigation for UAVs, with their current work concentrating on exploiting standard as well as developmental ‘event’ cameras.

“With a standard camera you get frames at constant time intervals,” he says. “By contrast, an event camera does not output frames but has smart pixels. Every pixel monitors the environment individually and only outputs information whenever it detects motion.”

Because they don’t generate a signal unless they detect change, event cameras are much more economical with bandwidth and computing power than standard frame cameras. And because they don’t accumulate photons over time, they are immune to motion blur, he explains.
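A common simplified model of an event pixel is that it fires when the logarithm of its brightness changes by more than a set threshold. The Python sketch below applies that model to a short sequence of ordinary frames. The function name, threshold and test frames are assumptions for illustration, and a real sensor reports changes asynchronously rather than frame by frame.

```python
import math
from typing import List, Tuple

Event = Tuple[int, int, int, int]  # (frame index, row, column, polarity)


def events_from_frames(frames: List[List[List[float]]],
                       threshold: float = 0.2,
                       eps: float = 1e-3) -> List[Event]:
    """Emit an event wherever log-brightness moves past the threshold.

    Simplification: at most one event per pixel per frame.
    """
    # Each pixel remembers the log-brightness at its last event.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                level = math.log(value + eps)
                diff = level - ref[r][c]
                if abs(diff) >= threshold:
                    events.append((t, r, c, 1 if diff > 0 else -1))
                    ref[r][c] = level
    return events


# Assumed scene: a bright spot moves one pixel to the right per frame
# along the top row; the bottom row never changes.
frames = [
    [[1.0, 0.1, 0.1], [0.1, 0.1, 0.1]],
    [[0.1, 1.0, 0.1], [0.1, 0.1, 0.1]],
    [[0.1, 0.1, 1.0], [0.1, 0.1, 0.1]],
]
events = events_from_frames(frames)
print(len(events), "events from", 2 * 6, "pixel readings")
```

Only the pixels the spot leaves or enters produce output, which is the source of the bandwidth saving described above: the static bottom row stays silent.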

Eliminating motion blur will enable small UAVs to use vision-based navigation at much higher speeds, making them more robust to failures in the propulsion system, for example. It can even allow them to dodge objects thrown at them, he says, and these last two benefits have been demonstrated experimentally by Dr Scaramuzza’s team.

In the race against the champions, the Swift quadcopter used a conventional frame camera (an Intel RealSense T265) and an IMU. A neural network running on an Nvidia Jetson TX2, a GPU-based AI computer, processed their inputs in real time and issued the manoeuvre commands. The race took place on a course consisting of a series of gates through which the UAVs had to fly in the correct order.

Training the AI autopilot

In the Swift, the neural network takes the place of the perception, planning and control architecture that has dominated robotics for 40 years. In most UAVs that use vision-based navigation, the perception module runs the V-SLAM software.

“The problem is that it is a bit too slow, and it is very fragile to imperfect perception, especially when you have motion blur, high dynamic range and unmodelled turbulence, which you get at high speeds,” he says. “So we took a neural network and tried to train it to do the same job as perception, planning and control. The challenge there is that you need to train a neural network.”

Doing that in the real world with a team of expert pilots was deemed impractical because of the downtime for battery changes and crash repairs. So using a reinforcement learning algorithm, the Swift was trained in virtual environments developed by the games industry.

“Reinforcement learning works by trial and error,” he says. “We simulated 100 UAVs in parallel that are trying to learn to fly through the gates as fast as possible so that they reach the finish line in the minimum time. They took hundreds of thousands of iterations, which converged in 50 minutes. A year has passed since the race, and we can now do it in 10 minutes.”
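Trial-and-error learning of this kind can be shown on a much smaller scale. The Python sketch below uses tabular Q-learning on a made-up one-dimensional track, with 100 simulated drones exploring at random in each iteration. It is a toy under stated assumptions, not the team's training method: the track length, gate positions, rewards and function names are all hypothetical.

```python
import random

TRACK_LEN = 8          # finish line position on the toy track (assumed)
GATES = {3, 6}         # cells where flying fast means a crash (assumed)
SLOW, FAST = 0, 1      # slow advances one cell, fast advances two


def step(pos: int, action: int):
    """Return (next_pos, reward, done) for one move on the toy track."""
    if action == FAST and pos in GATES:
        return pos, -20.0, True              # crashed into a gate
    nxt = pos + (2 if action == FAST else 1)
    return nxt, -1.0, nxt >= TRACK_LEN       # every move costs time


def train(iterations: int = 200, drones: int = 100, seed: int = 0):
    """Learn action values from many random flights sharing one table."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(TRACK_LEN)]
    for _ in range(iterations):
        for _ in range(drones):
            pos, done = 0, False
            while not done:
                action = rng.randrange(2)    # pure exploration
                nxt, reward, done = step(pos, action)
                future = 0.0 if done else max(q[nxt])
                # The toy world is deterministic, so a learning rate of 1
                # is used: overwrite with reward plus best future value.
                q[pos][action] = reward + future
                pos = nxt
    return q


def fly_greedy(q):
    """Fly once using the best known action in every cell."""
    pos, moves, done = 0, 0, False
    while not done:
        action = q[pos].index(max(q[pos]))
        pos, reward, done = step(pos, action)
        moves += 1
        if reward < -1.0:
            return moves, True               # crashed
    return moves, False


q_table = train()
moves, crashed = fly_greedy(q_table)
print("moves to finish:", moves, "crashed:", crashed)
```

The learned policy flies fast wherever it can but slows down so that it never has to fly fast out of a gate cell, reaching the finish in five moves; four fast moves in a row would end in a crash at the second gate.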

Although the AI proved much faster than the human champions, Dr Scaramuzza does not yet claim it is better than human pilots, as there is still a lot to learn. For example, the AI cannot generalise its learning to transfer it to different environments and tracks the way humans can. Also, it cannot factor in risk, he says.

“Humans are very cautious because they do not want to crash often, so naturally they don’t always fly as fast as they could,” he says. That makes human operators more robust.

Faster, but not yet better

To prepare for the race, Dr Scaramuzza’s team measured the champions’ reaction times, which averaged a relatively slow 0.25 seconds. However, human pilots rely much more on anticipation than reaction, he says.

“Humans are good at building world models to anticipate things,” he explains. “Give them an image of an environment and they can speculate what the environment will look like as they start navigating it. Machines cannot do that.”

Giving AI a comparable ability to anticipate is an important area of research, he says, and the approach is analogous to the creation of large language models such as ChatGPT. “What we are trying to develop are visual models that would allow machines to make predictions from a sequence of images.”

In developing AI-driven UAVs, Dr Scaramuzza engages with the ethical and humanitarian issues they raise. He consults on disarmament and runs seminars for the UN and for organisations such as Drones For Good and AI For Good.

“I would like to educate society to use AI and robotics responsibly,” he says. “We researchers have a responsibility when we publish open source code to be sure it is not directly applicable to misuse.”

He is also a keen promoter of the ‘no killer robot’ policy at UN level, wanting to make sure that in future AI doesn’t make the decision to use a lethal weapon, meaning that people remain in the decision loop. “At the moment there is no regulation, which means it’s problematic.”

Dr Scaramuzza’s lab has also generated spin-offs, including Zurich Eye, which became Meta Zurich and developed the Meta Quest VR headset, and SUIND, a crop-spraying and forest-monitoring operation in India, where it found a huge market. “They are taking technology from our lab to the real world and doing big things for good,” he says.

Dr Davide Scaramuzza

Inspired by a keen interest in mathematics, science and philosophy at high school, the Liceo Scientifico Renato Donatelli in Terni, Dr Scaramuzza went on to earn his bachelor’s degree in electronic engineering at the University of Perugia.

That led to a PhD in robotics and computer vision from ETH Zurich, and a postdoc in the same field at the University of Pennsylvania. He has also served as a Visiting Associate Professor at Stanford University, and is now Associate Professor of Robotics and Perception at the University of Zurich.

As a counterpoint to his work as a scientist, he enjoys performing magic tricks for audiences ranging from children to colleagues. “I think the connection there is to do with the fact that robots move by themselves, and with magic you make people believe in telekinesis,” he says.

“There was a trick called the zombie ball I used to do a lot for kids. The ball floats in the air, and for me, seeing a robot move by itself is like magic.”
