AI certification

(Image courtesy of Ningfei Wang/UC Irvine)

Getting to trust AI

Greater use of AI in uncrewed systems demands increased certification and validation, writes Nick Flaherty

The latest developments in AI are putting more focus on how to enable transparency, validation and certification of autonomous systems operating in the air, on the ground and at sea.

While AI is widely seen as a ‘black box’, with inputs and outputs but limited visibility of what happens in between, the end model is deterministic. According to the classification by the Federal Aviation Administration (FAA), the model is machine-learned, not machine learning (ML). Once training, testing and validation are completed in the lab, the neural network’s parameters are fixed, meaning that it doesn’t evolve or learn during operation. So, when deployed as a software component, it behaves deterministically; given the same input, it will always produce the same output.
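The distinction between a machine-learned component and a learning one can be illustrated with a minimal sketch. The weights, biases and the `infer` function below are hypothetical, chosen only for illustration; the point is that nothing in the inference path updates the parameters, so repeated calls with the same input on the same software and hardware stack return the same result.

```python
import math

# Hypothetical frozen parameters: fixed after training, never updated in operation.
WEIGHTS = ((0.5, -1.2, 0.3), (0.8, 0.1, -0.7))
BIASES = (0.05, -0.1)


def infer(features):
    """Run one forward pass of a tiny fixed-weight layer with sigmoid outputs."""
    outputs = []
    for row, bias in zip(WEIGHTS, BIASES):
        activation = sum(w * x for w, x in zip(row, features)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-activation)))
    return tuple(outputs)


sample = (0.2, 0.4, 0.9)
first = infer(sample)
second = infer(sample)
print(first == second)  # the two passes are compared for exact equality
```

Because the parameters are stored as immutable tuples and `infer` has no side effects, the equality check is a property of the code rather than of one lucky run.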

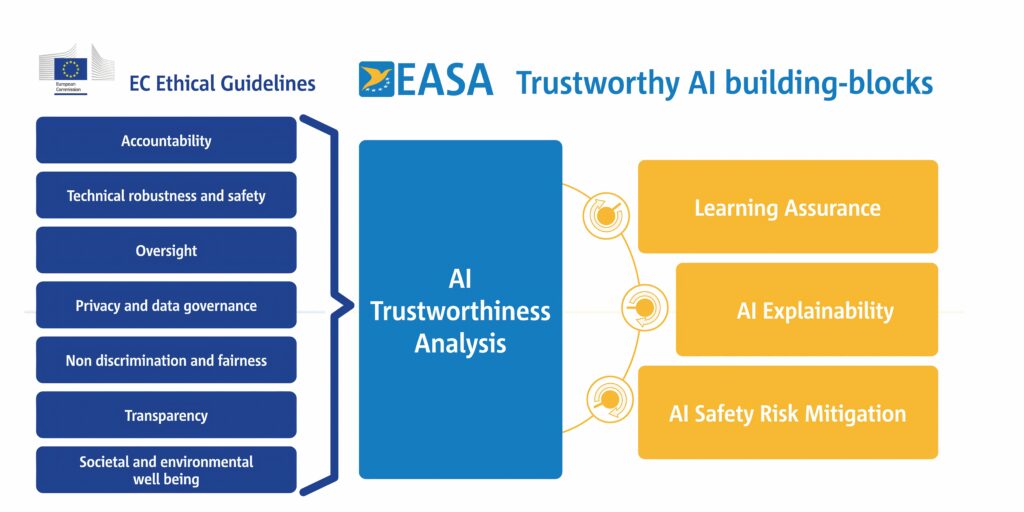

The AI Act in Europe is driving the requirement for demonstrating trustworthy AI, although the current legislation for autonomous vehicles comes under product requirements that are having to add in AI certification.

The question then is how to ensure that the machine-learned component works as intended – and what that depends on. The answer lies in using properly designed data for training, testing and validation, together with a sound learning process and a well-structured model. If any of these elements are flawed, the system can fail, but all three can be verified and certified.

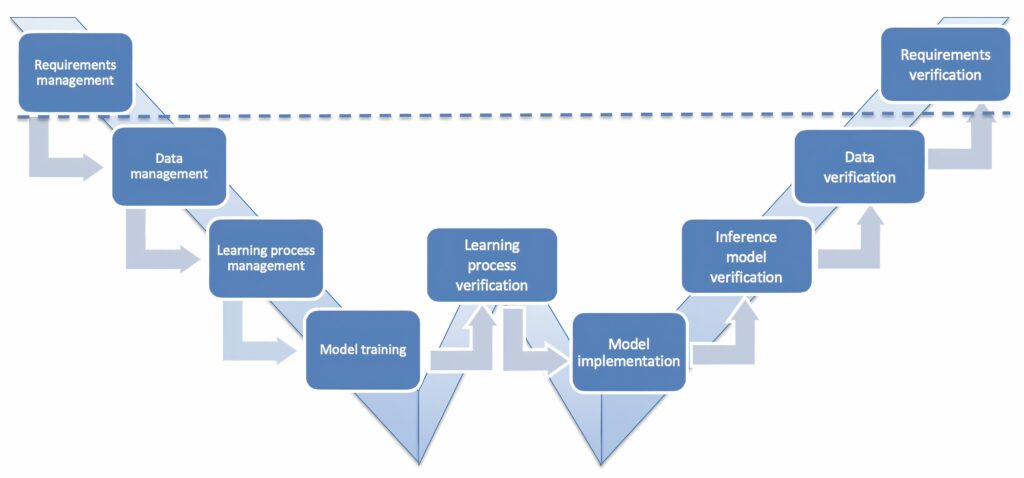

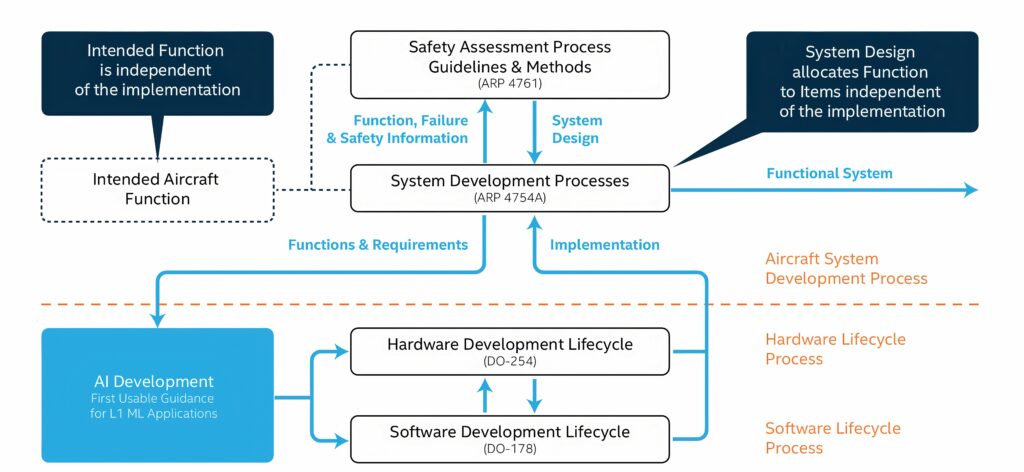

This is leading to a W-shaped development process, rather than the traditional V-shaped process.

However, recent vulnerabilities with image processing of traffic signs, as detailed below, highlight the need for more sophisticated assessment of the performance of camera subsystems, including the ML algorithms.

Aircraft

The US FAA has been working on a certification pathway for AI used in air traffic control to include uncrewed systems, and this highlights the challenges of certifying AI in aviation applications.

There is a wide range of potential usages of AI in air traffic management (ATM). The AI development process differs from typical software development, which impacts the certification of AI technologies.

The steps and considerations needed to certify AI/ML technology have not yet been addressed from a regulatory standpoint, and this must happen before the benefits of AI can be realised. AI must be implemented in a way that is trustworthy, safe, lawful, robust, ethical and unbiased.

(Image courtesy of EASA)

The AI/ML Certification Framework focuses on the certification of new and emerging technologies under consideration by the FAA and, in particular, on AI/ML technologies. This is starting with the certification of low-risk/low-safety AI/ML technologies, and will consider the certification of any type of AI/ML-related technology and service within the FAA supporting aircraft, ATM and uncrewed components.

It will also consider the certification of AI/ML technologies that are embedded within specific AI/ML systems, assuming that the certification of hardware and non-AI/ML software components is addressed by existing certification requirements.

The Certification Framework is working on several pathways ranging in scope from new FAA-led development of AI/ML technologies from start to finish to commercial-off-the-shelf (COTS) AI/ML technologies received in near-final form. It is also covering modified COTS AI/ML technologies that have been received and altered for use, as well as previously certified AI/ML technologies now used in other systems or being updated in the same system.

Certification methodology

There are three main phases in the certification methodology. A preliminary assessment evaluates the initial usability, risk, response and safety aspects of the AI technology, together with the level of certification required, and also provides the certification pathway.

The compliance phase defines and compiles all documentation necessary for approval, and then the approvals phase coordinates with stakeholders to review and approve the documentation.

(Image courtesy of Daedalean)

The initial use case is applying the framework to prototype AI technologies for the US National Airspace System such as Runway Configuration Assistance. Air traffic controllers are responsible for determining optimal airport runway configurations and related decision-making is challenging owing to the many changing factors involved. ML models can be used to provide insights that enhance this decision-making process.

A checklist is used to ensure that the framework meets end-to-end requirements, but it does not cover the verification and validation (V&V) of specific AI/ML technologies, algorithms or models. Results of V&V activities are used to verify and validate the AI/ML Certification Framework.

Certification of AI integrated into uncrewed aircraft is another matter and this is driving the W-shaped development process.

This adds assessment of AI components alongside the V-shaped model. The W-shaped process adds a focus on data management to ensure data quality, the right amount of data (the volume) and the right type of data (the representativeness) for the system. The Model Training Verification assesses and verifies the learning process itself, not just the final model, while the learning assurance evaluates the model’s properties, performance on unseen data and efforts to avoid issues like overfitting.
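As a rough sketch of what the data management and learning assurance checks might look like in practice, the example below tests a labelled dataset for volume and representativeness, and computes the gap between training accuracy and accuracy on unseen data as a simple overfitting signal. The function names, thresholds and class labels are assumptions for illustration and are not taken from any certification standard.

```python
from collections import Counter


def check_dataset(labels, min_samples, min_class_share):
    """Return a list of findings on data volume and class representativeness."""
    findings = []
    if len(labels) < min_samples:
        findings.append(f"volume: {len(labels)} samples, need {min_samples}")
    if not labels:
        return findings
    counts = Counter(labels)
    for cls, count in sorted(counts.items()):
        share = count / len(labels)
        if share < min_class_share:
            findings.append(f"representativeness: class '{cls}' is {share:.0%} of data")
    return findings


def generalisation_gap(train_accuracy, unseen_accuracy):
    """Difference between training accuracy and accuracy on unseen data."""
    return train_accuracy - unseen_accuracy


# Hypothetical label set: 100 samples, heavily skewed towards one class.
labels = ["runway"] * 90 + ["taxiway"] * 8 + ["obstacle"] * 2
findings = check_dataset(labels, min_samples=200, min_class_share=0.05)
for finding in findings:
    print(finding)
print(f"gap: {generalisation_gap(0.99, 0.91):.2f}")
```

A real process would record these findings as verification artefacts; here they are simply printed.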

The AI model then has to be implemented in the actual software used in real operational conditions, which means that it must function on real aviation hardware with its limited memory, stack and computational performance. This step, called inference model verification, needs to verify that the software has the same properties as the training model. From there, the process needs to verify that the data have been managed correctly throughout the cycle, are independent and complete, and that all the requirements set in the first step of the W-shaped process have been met.
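One narrow aspect of inference model verification can be sketched as a numerical comparison between a floating-point reference and an 8-bit integer implementation of the same operation. This is only a toy under stated assumptions: the weights, scale factors, test inputs and tolerance are hypothetical, and real verification covers far more properties than numerical closeness.

```python
def quantise(values, scale):
    """Map floats to signed 8-bit integers using a shared scale factor."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def reference_dot(weights, inputs):
    """Floating-point result, standing in for the training-environment model."""
    return sum(w * x for w, x in zip(weights, inputs))


def int8_dot(weights, inputs, w_scale, x_scale):
    """Integer-arithmetic result, standing in for the embedded inference model."""
    qw = quantise(weights, w_scale)
    qx = quantise(inputs, x_scale)
    accumulator = sum(a * b for a, b in zip(qw, qx))  # integer multiply-accumulate
    return accumulator * w_scale * x_scale


def max_deviation(weights, test_inputs, w_scale, x_scale):
    """Largest absolute difference between the two implementations."""
    return max(
        abs(reference_dot(weights, x) - int8_dot(weights, x, w_scale, x_scale))
        for x in test_inputs
    )


weights = [0.5, -0.25, 0.75]  # hypothetical trained weights
test_inputs = [[0.123, -0.456, 0.789], [0.2, 0.4, 0.9], [-1.0, 1.0, 0.0]]
TOLERANCE = 0.01  # hypothetical acceptance threshold
deviation = max_deviation(weights, test_inputs, w_scale=0.01, x_scale=0.01)
print(deviation <= TOLERANCE)
```

The tolerance would in practice be derived from the system requirements rather than picked by hand.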

The latest version of the Concepts of Design Assurance for Neural Networks (CoDANN) II for this certification includes the learning and inference environments and model implementation, elements for the explainability of the model that demonstrate why the model performs as it does, and a safety assessment.

GPUs

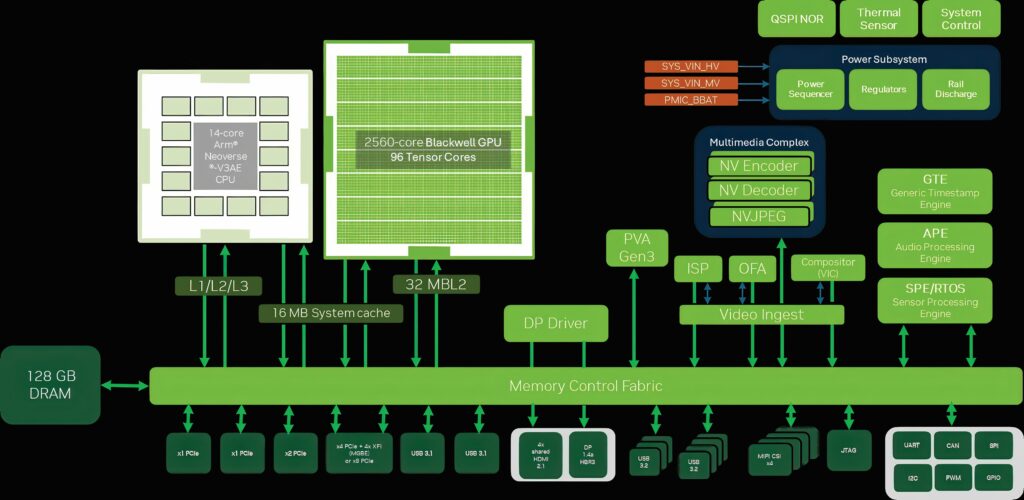

However, there are some issues with the implementation, particularly with the use of graphics processing units (GPUs) that are used to run AI models based on transformer operations. While these have been popular for large language models, they are also now being used for image processing, and so are increasingly included as part of the uncrewed aircraft system.

The current advice is to use field programmable gate arrays (FPGAs) instead, which ensure more traceability regarding the code used in such systems.

Neural-network-based products for aircraft currently require 2–5 tera operations per second (TOPS) of 8-bit integer computing power per camera, with the likelihood of this requirement increasing as new functions are added. This would necessitate usage of a modern high-end compute device, such as a GPU.
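The per-camera figure can be turned into a simple system-level budget. In the sketch below, only the 2–5 TOPS range comes from the text; the camera count and the growth margin for future functions are hypothetical values chosen for illustration.

```python
TOPS_PER_CAMERA_MIN = 2.0  # from the text: lower bound per camera (8-bit integer)
TOPS_PER_CAMERA_MAX = 5.0  # from the text: upper bound per camera (8-bit integer)


def compute_budget(cameras, margin=0.0):
    """Return (min, max) TOPS for a number of cameras, with an optional growth margin."""
    factor = cameras * (1.0 + margin)
    return (TOPS_PER_CAMERA_MIN * factor, TOPS_PER_CAMERA_MAX * factor)


# Hypothetical system: four cameras with 50% headroom for new functions.
low, high = compute_budget(cameras=4, margin=0.5)
print(f"{low:.0f}-{high:.0f} TOPS")  # prints "12-30 TOPS"
```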

The current certification regulation process requires that the electronic component management process should also address the access to component data such as the user manual, datasheet, errata, installation manual and access to information on changes made by the component manufacturer.

For the DAL A or DAL B safety levels, the process for selecting a complex COTS device (such as a GPU) should consider the maturity of the COTS device, and where risks are identified, they should be mitigated appropriately.

GPUs are evolving fast, with a new generation of architecture being released every 18 months. The designs are maintained by the manufacturers for several years at best and then obsoleted and replaced by vastly superior technology.

This means a design rarely has much time to mature before it is significantly altered. There is also very little transparency with any kind of errata information. Usually, proprietary software drivers are just silently fixed by the vendor or not fixed at all because the devices have reached their end of life. There is often no issue notification system because the information is considered a sensitive trade secret.

Similarly, while installation/integration manuals are usually available under Non-Disclosure Agreements, the information equivalent to user manuals or datasheets is also generally considered a trade secret.

Developers have been able to reverse-engineer one driver that was open source and, to an extent, adjust it to their needs. However, they could not obtain the necessary information to complete the work, even after signing all the necessary legal agreements.

Providing support for these components throughout the life-span of an aircraft is hard and expensive. The aviation market is still very small in terms of device volumes, so there is little economic incentive for this to change in the near future.

When a complex COTS device is used outside the device manufacturer’s specification (such as the recommended operating limits), the developer must establish the reliability and the technical suitability of the device in the intended application.

Given the complexity of these devices, this is a significant challenge because the devices may not be designed or tested for extreme temperature conditions. They may fail to operate or not behave as expected outside their tested operating conditions. Operating outside of the validated temperature range may cause hold time violations in synchronous digital circuits, leading to unpredictable behaviour.

At low temperatures, clock oscillators may also operate at unexpected frequencies or fail to start. Most modern COTS devices will require on-chip analogue circuitry for high-speed on-chip memory and networking interfaces. These circuits may fail if exposed to environmental operating conditions other than those for which they are carefully tuned.

Dynamic voltage and frequency scaling are often applied in response to fluctuations in temperature, supply voltage, current and other factors. The range and impact of these features may not be known, leading to significant unpredictable variance in timing behaviour. The latest GPUs may employ mechanisms to detect fine-grain failures and ‘replay’ or reassign failing operations, further leading to unpredictable behaviour.

The large size of the devices greatly increases the chance of partial failure, increasing costs. To mitigate this, devices may be sold with regions of the design selectively disabled after production.

Modern designs are also likely to employ a complex memory system, such as a network-on-chip, to route data as packets. This combination means that two devices with an equivalent subset of the intended design, sold as the same product, may exhibit different timing behaviour, as data are routed around unused regions of the chip.

There are also issues with the microcode that drives the devices. For certification, if the microcode is not qualified by the device manufacturer or if it is modified by the applicant, there needs to be a means of compliance for this microcode.

For instance, one GPU comes with 650 KB of binary microcode. This microcode has paramount impact on the device functionality and is considered a sensitive trade secret. This microcode controls the behaviour of the power management unit and other features. The impact of the algorithms implemented in the microcode on the reaction of the device to the environmental conditions is impossible to foresee without the full cooperation of the chip maker.

(Image courtesy of Daedalean/Intel)

The verification process also includes the hardware–software and hardware–hardware interfaces.

There is limited information available on the hardware–software interfaces for high-performance GPU devices, particularly with multiple hardware cores, and the core partitioning characteristics are largely kept secret by the chip vendors.

An assumption in the certification process is that software applications are statically allocated to cores during the start-up of the software but not during subsequent operation.
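The static allocation assumption can be modelled as a table that is writable during start-up and read-only afterwards. The class and application names below are hypothetical, and the sketch does not pin real threads to real cores; it only shows the rule that any attempt to reallocate after start-up is rejected.

```python
class CoreAllocation:
    """Toy allocation table: writable during start-up, read-only afterwards."""

    def __init__(self, core_count):
        self._core_count = core_count
        self._table = {}
        self._frozen = False

    def assign(self, application, core):
        """Allocate an application to a core; only allowed before freeze()."""
        if self._frozen:
            raise RuntimeError("allocation is fixed after start-up")
        if not 0 <= core < self._core_count:
            raise ValueError(f"core {core} does not exist")
        self._table[application] = core

    def freeze(self):
        """Mark the end of start-up; no further changes are accepted."""
        self._frozen = True

    def core_of(self, application):
        return self._table[application]


allocation = CoreAllocation(core_count=4)
allocation.assign("flight_control", 0)  # hypothetical application names
allocation.assign("vision_inference", 1)
allocation.freeze()

try:
    allocation.assign("vision_inference", 2)  # attempted move during operation
except RuntimeError as error:
    print(error)
```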

GPUs are typically used as a coprocessor to the main central processing unit (CPU) to execute the AI models. But the GPU often has the capability to master the main memory/PCIe bus and interfere with the CPU’s access to the main memory. The initiation of these data transfers is typically controlled by the application software running on the CPU. However, sufficient information to control the bus bandwidth allocation is unlikely to be available and hardware-based mitigation outside of the GPU may be necessary to prevent this happening.

Legal requirements

In essence, the EU AI Act redefines the automotive industry’s approach to AI, emphasising ethics, transparency and safety in automated decision-making, while also influencing global standards.

In June 2024, the EU adopted the world’s first rules on AI. The AI Act becomes fully applicable 24 months after its entry into force, but some parts apply sooner: a ban on AI systems posing unacceptable risks was introduced on February 2, 2025, and rules requiring general-purpose AI systems to comply with transparency requirements also come into force earlier. High-risk systems, which include autonomous vehicles, will have more time to comply because the obligations concerning them become applicable 36 months after the entry into force.

However, these are AI systems that are used in products and so fall under the EU’s product safety legislation along with aviation systems.

Autonomous and automated vehicles (AVs) in the EU are subject to a range of regulatory frameworks. Some, such as the Type-Approval Framework Regulation (TAFR) – encompassing Regulation (EU) 2018/858 and Regulation (EU) 2019/2144 – focus specifically on automotive standards, including vehicle approval.

Under the TAFR, vehicles must complete a type-approval process to ensure compliance. Additional regulations such as the General Product Safety Regulation address broader product safety concerns.

Together, these regulations ensure that vehicles meet safety, environmental and technical standards across the EU. While they cover traditional safety issues, including aspects of AI use, they do not currently explicitly address AI-specific risks.

Multiple existing legal frameworks already regulate AI use in automotive products and services. However, there is currently no regulation focused exclusively on AI, although this is about to change.

(Image courtesy of NVIDIA)

The high-risk AI (HRAI) systems are those that could have serious adverse effects if they fail. These systems must meet stringent requirements for data security, transparency, human oversight and robustness, and undergo a mandatory conformance assessment with continuous monitoring throughout their life cycle.

AI’s role in controlling vehicle functions may significantly impact individual rights and freedoms. System failures could lead to accidents, property damage or personal injury.

At first glance, most AI-driven autonomous systems are expected to be classified as HRAI systems, but if they are governed by the TAFR, which mandates prior conformity assessments and approval, then the AI Act won’t apply directly.

This ensures automotive-specific AI use will remain regulated primarily by the sector’s legislation, while HRAI provisions in the AI Act will serve as supplementary but overarching standards. The TAFR specifications are being revised to incorporate the AI requirements going forward and this will impact on both the OEMs and the technology suppliers. This applies to any system shipped in the region, including from China, which has been leading in autonomous system development.

However, under the AI Act’s high-risk classification, businesses must adopt extensive documentation, monitoring and risk mitigation procedures in AI development. These include rigorous testing to identify and eliminate potential biases, transparency logs for decision traceability, and comprehensive quality assurance throughout the vehicle’s life cycle. Dedicated teams including external auditors will be needed to conduct risk assessments and ensure ongoing compliance, making the Act’s requirements technically complex, costly and resource-intensive.

(Image courtesy of UC Irvine)

The practical impact on non-EU companies and international regulatory goals remains to be seen. Although the impending revisions to the EU’s legal framework are not yet fully clear, businesses should familiarise themselves with the AI Act’s requirements. With an ambitious implementation timeline, early preparation will be essential to successfully navigate and comply with these new regulatory demands.

Vulnerabilities

For the first time, researchers have demonstrated that multi-coloured stickers applied to stop or speed limit signs on the roadside can confuse self-driving vehicles, causing unpredictable and possibly hazardous operations.

The threat of low-cost and highly deployable malicious attacks that can make traffic signs undetectable to AI algorithms in some autonomous vehicles, and make non-existent signs appear out of nowhere to others, had been considered largely theoretical. Either type of attack could result in cars ignoring road commands, triggering unintended emergency braking, speeding and other violations.

A large-scale evaluation of traffic sign recognition (TSR) systems in top-selling consumer vehicle brands – the first – found that these risks are not just theoretical after all, highlighting the need for rigorous risk analysis as part of a trustworthiness and certification process.

This highlights the importance of security and certification regarding AI algorithms because vulnerabilities in these systems, once exploited, can lead to safety hazards that become a matter of life and death.

The attack vectors of choice were stickers that had swirling, multicoloured designs that confuse AI algorithms used for TSR in driverless vehicles.

These stickers can be produced cheaply and easily by anyone with access to an open-source programming language such as Python and image processing libraries. Those tools combined with a computer with a graphics card and a colour printer are all that are needed to foil AI systems in autonomous vehicles.

An interesting discovery made during the project relates to the spatial memorisation design common to many of today’s commercial TSR systems. While this feature makes a disappearing attack (seeming to remove a sign from the vehicle’s view) more difficult, it makes spoofing a fake stop sign much easier than expected.
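The trade-off can be illustrated with a toy model of spatial memorisation, in which a sign continues to be reported for a few frames after its last detection. This is an assumption about how such a feature might behave, not a description of any commercial TSR system; the class name and the hold length are hypothetical.

```python
class SignMemory:
    """Toy tracker: a sign stays reported for `hold_frames` frames after a detection."""

    def __init__(self, hold_frames):
        self.hold_frames = hold_frames
        self._frames_left = 0

    def update(self, detected):
        """Feed one per-frame detection result; return whether the sign is reported."""
        if detected:
            self._frames_left = self.hold_frames
        elif self._frames_left > 0:
            self._frames_left -= 1
        return self._frames_left > 0


def run(detections, hold_frames=3):
    """Replay a sequence of per-frame detections through a fresh tracker."""
    memory = SignMemory(hold_frames)
    return [memory.update(d) for d in detections]


# Hiding attack: a real sign is missed in two frames, but memory bridges the gap.
hiding = run([True, True, False, False, True])
# Spoofing attack: one false detection is remembered for several frames.
spoofing = run([False, True, False, False, False])
print(hiding)
print(spoofing)
```

In this toy model, the hidden sign is reported in every frame, while the single spoofed detection persists for three frames, which matches the asymmetry described above.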

Academics have studied driverless vehicle security for years and have discovered various practical security vulnerabilities in the latest autonomous driving technology. But these studies have been limited mostly to academic setups, leaving our understanding of such vulnerabilities in commercial autonomous vehicle systems highly limited.

Marine

The OODA (Observe, Orient, Decide, Act) loop decision-making model has been in use in marine applications for decades. In a human context, assurance of the accuracy of each phase can be achieved through techniques such as observing the process and challenging assumptions. However, if one part or all of the system-of-systems is automated, assurance of the process is difficult, particularly if the OODA loop operates with AI.

The model translates well from human to machine, with automation bringing benefits of assimilation of vast quantities of data, rapid assessment and machine-speed repeatability. However, operating at machine speed, governed by AI with impenetrable algorithms and overlaid with ML, all stages of the decision-making cycle become opaque, with only the ‘action’ being observable.

Adding AI into a maritime system-of-systems, particularly in autonomous navigation and collision avoidance, means it is virtually impossible to assure each part of the OODA loop. The decision/action, which is the output of the OODA loop, can be assured through a variety of means such as repeated testing in live or synthetic environments. If the required assurance levels cannot be met, risks can be managed by limiting operating areas to account for its capabilities or requiring human oversight.

One example from a trial of an autonomous Royal Navy vessel highlights the challenge. Whilst transiting an empty Solent (UK) on a clear day at speed, the jet-propelled autonomous vessel came to an instant stop just before a helicopter flew past the bow at a height of 100 feet and a speed of 100 kts. The action of giving way to an unknown, bow-crossing AIS-fitted vessel doing 100 kts was probably correct, but it relied on interpreting the AIS-fitted vehicle as being on the surface doing 100 kts rather than being in the air.

The key to assuring the safety of an AI-enabled system-of-systems is to build trust in the system and achieve ‘calibrated trust’. This is a balance between user trust and system trustworthiness, an objective measure of how much the system can be trusted.

If it is not balanced, then there is either too much trust in the system (overtrust) or a position where it is considered that the supervisor ‘knows best’ (undertrust).
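The balance can be expressed as a simple comparison, assuming that both user trust and system trustworthiness have been scored on a common 0 to 1 scale. That scale, the function name and the tolerance band are assumptions for illustration; the source does not define how either quantity is measured.

```python
def trust_calibration(user_trust, trustworthiness, tolerance=0.1):
    """Compare user trust with measured trustworthiness, both on a 0-1 scale."""
    gap = user_trust - trustworthiness
    if gap > tolerance:
        return "overtrust"
    if gap < -tolerance:
        return "undertrust"
    return "calibrated"


# Hypothetical scores for three operator/system pairings.
print(trust_calibration(0.9, 0.6))   # overtrust
print(trust_calibration(0.3, 0.8))   # undertrust
print(trust_calibration(0.7, 0.75))  # calibrated
```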

(Image courtesy of the Royal Navy)

Calibrated trust as a concept can apply to all system-of-systems. This can be achieved using a systems-engineering approach to design a set of trustworthiness categories, which can be prioritised for a system based on risk analysis. These can be assessed against known/published standards to define a Trust Quality Assessment with performance monitoring and a vital feedback loop.

Conclusion

It is still early days regarding the implementation of AI, with technological advances outpacing the ability to regulate. Certification of a system must be considered from the start of a project, with documentation and verification artefacts produced throughout. The W-shaped development process adds verification of the fixed-function AI model into the certification process, providing assurance for the functionality. This is likely to restrict the adoption of self-learning architectures within an autonomous system because the function is not fixed and so cannot be certified.

There are also key component issues, particularly in UAVs. The adoption of more complex devices such as commercial GPUs for handling AI models still faces challenges regarding certification of the underlying embedded software, driving the adoption of FPGAs where all firmware can be visible and documented.

Acknowledgements

With thanks to TJ Tejasen at the FAA, Maria Pirson at Daedalean, Ben Keith at Frazer-Nash, Alfred Chen at the University of California Irvine and Thomas Kahl at Taylor Wessing.
